So, what exactly was gained? I’m not sure I understand what you’ve done here.

Beginning of accelerated desktop… I think?

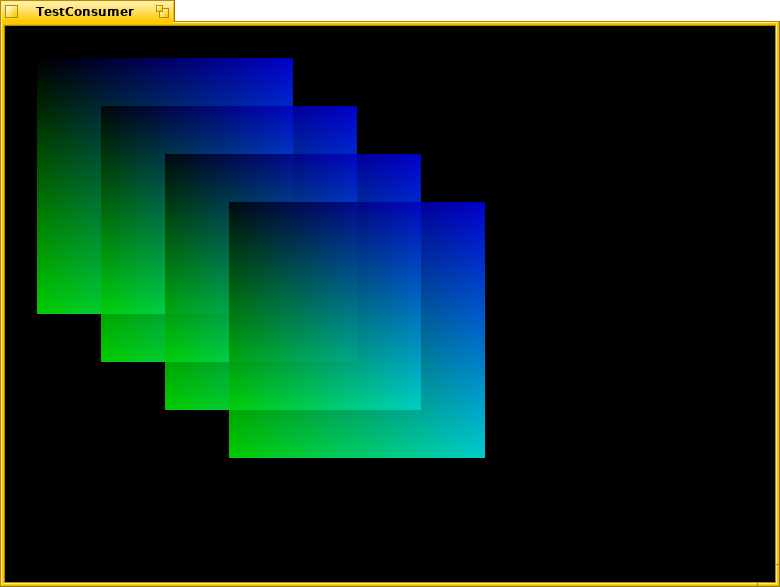

An initial version of the compositor is working:

While implementing the protocol, I ran into a deadlock when two connected nodes are handled in the same thread: sending a synchronous message to a BHandler that lives in the sender's own thread blocks forever. I made a workaround:

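    #include <Handler.h>
    #include <Looper.h>
    #include <Message.h>
    #include <Messenger.h>

    static status_t SendMessageSync(
        BHandler* src, const BMessenger &dst, BMessage* message, BMessage* reply,
        bigtime_t deliveryTimeout = B_INFINITE_TIMEOUT, bigtime_t replyTimeout = B_INFINITE_TIMEOUT
    )
    {
        if (dst.IsTargetLocal()) {
            BLooper* dstLooper;
            BHandler* dstHandler = dst.Target(&dstLooper);
            if (src->Looper() == dstLooper) {
                // Sender and target share one looper thread, so a blocking
                // send would wait on itself. Call the handler directly.
                // !!! can't get reply: `reply` is left untouched on this path
                dstHandler->MessageReceived(message);
                return B_OK;
            }
        }
        // Different looper or remote target: a normal synchronous send is safe.
        return dst.SendMessage(message, reply, deliveryTimeout, replyTimeout);
    }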
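The "can't get reply" limitation of the workaround above can be illustrated with a small portable model. This is only a sketch: `Message`, `Handler`, `Looper`, `SendResult` and `SendOrDispatch` are hypothetical stand-ins written for this example, not Haiku classes. It assumes the handler callback is given a reply object to fill, which is one possible way an inline dispatch could still return data to the sender.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical stand-ins, NOT the Haiku classes. A message is a bag of
// string fields; a looper owns a queue that its own thread would drain.
using Message = std::map<std::string, std::string>;

struct Looper;

struct Handler {
	Looper* looper = nullptr;
	// The callback may fill `reply`; `reply` is null for queued messages.
	std::function<void(const Message&, Message*)> onMessage;
};

struct Looper {
	std::deque<std::pair<Handler*, Message>> queue;

	// Deliver one queued message; returns false if the queue was empty.
	bool ProcessOne()
	{
		if (queue.empty())
			return false;
		std::pair<Handler*, Message> item = queue.front();
		queue.pop_front();
		item.first->onMessage(item.second, nullptr);
		return true;
	}
};

enum class SendResult { kDispatchedInline, kQueued };

SendResult SendOrDispatch(Handler& src, Handler& dst, const Message& message,
	Message* reply)
{
	assert(dst.looper != nullptr && dst.onMessage);
	if (src.looper == dst.looper) {
		// Same looper: waiting on the queue would wait on ourselves,
		// so call the handler directly and let it fill `reply`.
		dst.onMessage(message, reply);
		return SendResult::kDispatchedInline;
	}
	// Different looper: only queue the message here. Blocking until the
	// other thread answers is deliberately left out of this model.
	dst.looper->queue.emplace_back(&dst, message);
	return SendResult::kQueued;
}
```

The design choice in this model is that the reply travels as an out-parameter instead of through a reply port, so the inline path needs no second thread to answer.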

I implemented the compositor protocol, and clients from separate processes can now connect and disconnect.

Very well done. Would you consider a bounty or contract to work on 3D drivers?

Donations are accepted here; they can speed up development.

About bounties: that should be asked of Haiku inc., not me. I am not an official member of the Haiku development team and I have no decision power. I also have a full-time job, and I am not sure a contract could be combined with it.

Next plans (approximate):

-

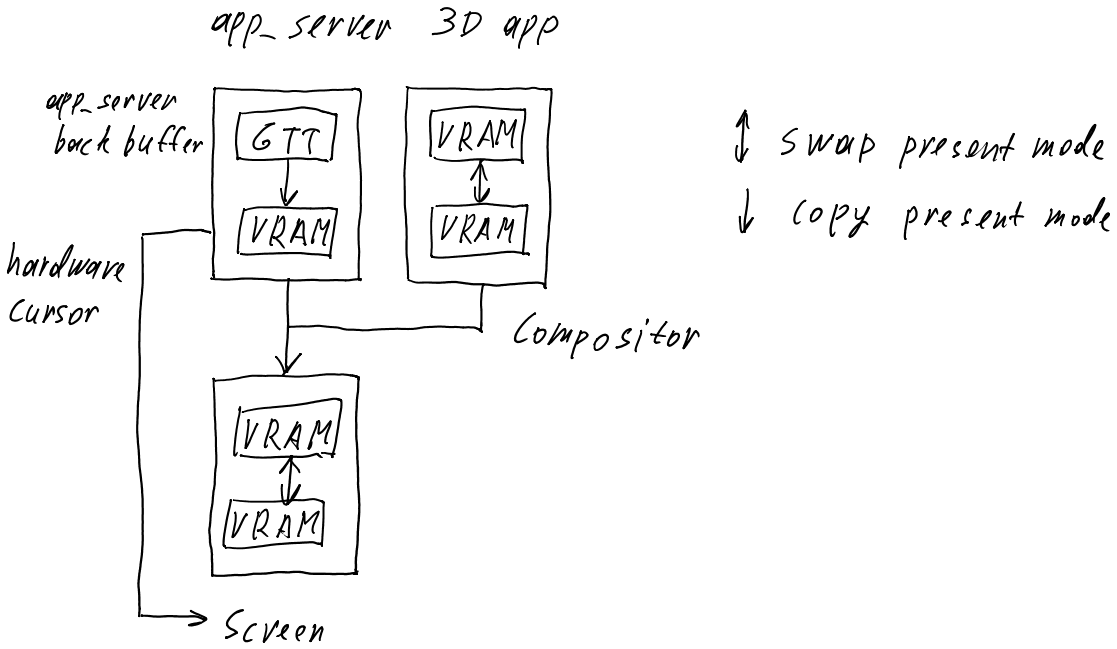

Implementing copy swap chain present mode. It is needed for app_server.

-

Integration with app_server by implementing CompositorHWInterface. test_app_server can be used first.

-

Compositor surface window based on BWirectWindow (for getting clipping information from app_server).

-

[optional] Semitransparent windows based on BDirectWindow with modified region calculation.

-

Mesa3D OpenGL integration.

-

Software Vulkan rendering integration.

-

VideoStreams graphics card management API (VideoPorts) and multiple monitor support.

I will note here for users’ sake that while some of the API research in here is interesting, this is mostly orthogonal (i.e. related but not contributing to) 3D acceleration. That is, some of these APIs might be useful in a world where Haiku has 3D acceleration, but none of them are actually prerequisites for or actually contribute to 3D drivers.

Compositing is generally done because hardware accelerated graphics makes it relatively cheap and it makes other things (e.g. desktop shadows) significantly easier. It does not need to be done to get hardware accelerated 3D graphics, to be clear; I would expect that a first iteration of hardware acceleration would not touch app_server’s core drawing code at all.

I further note (as I have discussed with X512 elsewhere), that I’m not actually sure if the VideoStreams API discussed here has relevance anymore with the introduction of Vulkan WSI. These days, Vulkan and drivers want to do nearly all buffer and copy management themselves based on direction by the Vulkan API consumer. This is all specified in the Vulkan APIs, and while some of it requires interfaces with the windowing system, most of the internal buffer management that X512’s “VideoStreams” seems to supply is, in my understanding, entirely done by and with Vulkan and then the GPU drivers.

(Indeed, OpenGL leaves a lot more to the individual window system here, but if the future is entirely Vulkan, designing, implementing, and then supporting an API that largely has relevance only for OpenGL may not make a lot of sense.)

Haiku inc also has no decision power on the development. If someone else decides to set up a bounty, that’s fine.

Ultimately the development team (not Haiku inc) decides what is merged or not. It already happened that some work paid by Haiku inc was rejected by the development team and eventually rewritten. But of course Haiku inc is now asking the developers before setting up a paid contract, so that it doesn’t happen again, because it’s a bit embarrassing for everyone.

The donation link is broken.

Here is the correct donation link for X512: PayPal.Me

Chugin Ilya is his name? Just to be clear, thx.

Yes, my name is Chugin Ilya (surname, first name order).

If you’d like to donate to the Haiku Project overall (that PayPal link donates straight to X512), you can go here:

https://haiku-inc.org/donate

I implemented vsync in AccelerantConsumer using the retrace semaphore, and made a video file producer. Video now plays without tearing or frame skips. Tearing can be eliminated globally once VideoStreams is integrated with app_server.

I also implemented a variable buffer count in the swap chain FIFO; it is confirmed to work with 32 buffers in the swap chain. I made the swap chain more compatible with Vulkan. I plan to make a VulkanProducer WSI surface as a Vulkan API extension, so that Vulkan can render to any VideoStreams consumer in any address space.
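To make the FIFO behaviour concrete, here is a minimal model of a swap chain with a configurable buffer count. It is a sketch under assumptions: `SwapChainFifo` and its methods are hypothetical names invented for this example and are not the VideoStreams API. It assumes the consumer shows exactly one queued buffer per retrace, in submission order, and recycles the previously displayed buffer.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Hypothetical model, not the VideoStreams classes.
class SwapChainFifo {
public:
	explicit SwapChainFifo(int32_t bufferCount)
	{
		assert(bufferCount >= 2);
		for (int32_t i = 0; i < bufferCount; i++)
			fFree.push_back(i);
	}

	// Producer side: returns a free buffer index, or -1 if all are in use.
	int32_t Acquire()
	{
		if (fFree.empty())
			return -1;
		int32_t id = fFree.front();
		fFree.pop_front();
		return id;
	}

	// Producer side: queue a rendered buffer for display.
	void Present(int32_t id)
	{
		fQueued.push_back(id);
	}

	// Consumer side, called once per retrace. Shows the oldest queued
	// buffer and recycles the one shown before it. If nothing is queued,
	// the current buffer stays on screen. Returns the displayed index,
	// or -1 if nothing has been displayed yet.
	int32_t OnRetrace()
	{
		if (fQueued.empty())
			return fDisplayed;
		if (fDisplayed >= 0)
			fFree.push_back(fDisplayed);
		fDisplayed = fQueued.front();
		fQueued.pop_front();
		return fDisplayed;
	}

private:
	std::deque<int32_t> fFree;
	std::deque<int32_t> fQueued;
	int32_t fDisplayed = -1;
};
```

Because buffers are only swapped inside `OnRetrace()` and are taken strictly in queue order, this model neither tears nor skips frames; a larger buffer count simply lets the producer run further ahead of the display.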

That’s awesome, amazing work

Plan for integrating app_server with VideoStreams and RadeonGfx. test_app_server on a second monitor can be used for testing.

No, WSI is just a set of standard API hooks that must be implemented independently for each OS and windowing system. The surface creation API is purely OS-dependent: there is no generic create-surface function, only multiple per-OS ones.

See Vulkan sample code.

For buffer sharing in Vulkan, a dma-buf fd is used on Linux. You need to pass it explicitly to the Vulkan API.