ah that looks great!

Users should be able to use the color picker in the sample video on the street/paved road to sample the average grey color, which should usually be 50% grey, and use that to adjust the gradient of the video!
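To make the suggestion concrete, here is a rough sketch of how a picked road colour could be turned into a correction. It is only an illustration: `Rgb`, `GreyPointGains` and `ApplyGains` are made-up names, and it assumes linear RGB values in the 0..1 range with a simple per-channel gain, which may not be how the editor handles colour.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical colour type: linear RGB, each channel in [0, 1].
using Rgb = std::array<float, 3>;

// Per-channel gains that map the sampled road colour onto the target grey.
// `sampled` stands for the average colour returned by the colour picker.
Rgb GreyPointGains(const Rgb& sampled, float targetGrey = 0.5f)
{
    assert(targetGrey > 0.0f && targetGrey <= 1.0f);
    Rgb gains{};
    for (std::size_t i = 0; i < 3; ++i) {
        // Guard against division by zero when a channel of the sample is black.
        gains[i] = targetGrey / std::max(sampled[i], 1e-6f);
    }
    return gains;
}

// Applies the gains to one pixel and clamps the result back into [0, 1].
Rgb ApplyGains(const Rgb& pixel, const Rgb& gains)
{
    Rgb out{};
    for (std::size_t i = 0; i < 3; ++i)
        out[i] = std::clamp(pixel[i] * gains[i], 0.0f, 1.0f);
    return out;
}
```

With a road sample of (0.4, 0.5, 0.625), the gains come out as roughly (1.25, 1.0, 0.8), so the road itself lands on neutral 50% grey and every other pixel is shifted by the same amount.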

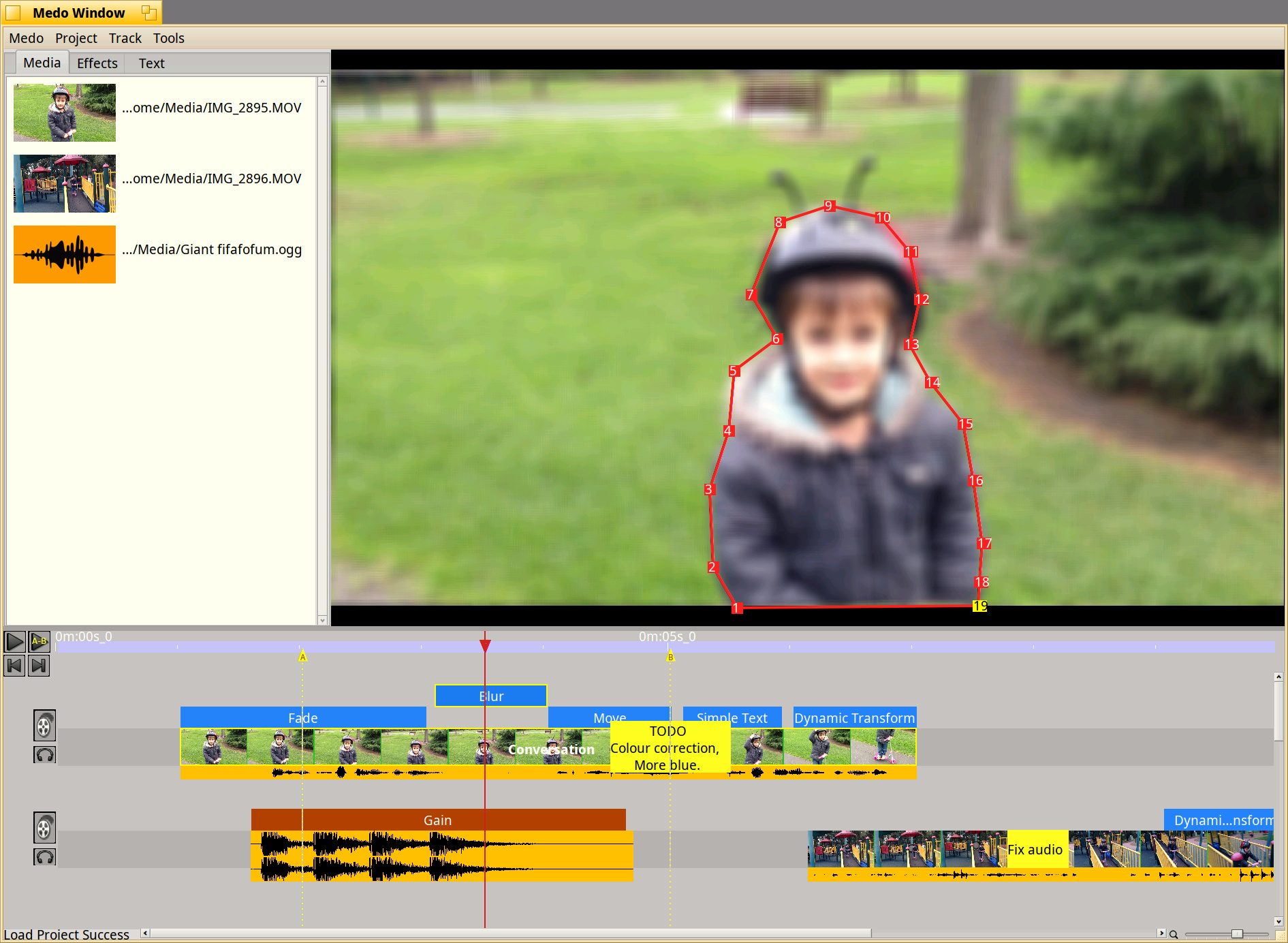

Not much development happening due to other commitments, but I had a couple of hours this week to develop a GUI outliner control (path/shapes) with resizable handles. The idea is to define a mask/stencil which can be applied to effects (eg. blur, cut, colour correction only on mask).

And this immediately introduces the requirement to have a keyframe motion tool to move the associated keypoints. This will take quite a bit of time to do …

Anyhow, monthly screenshot below.

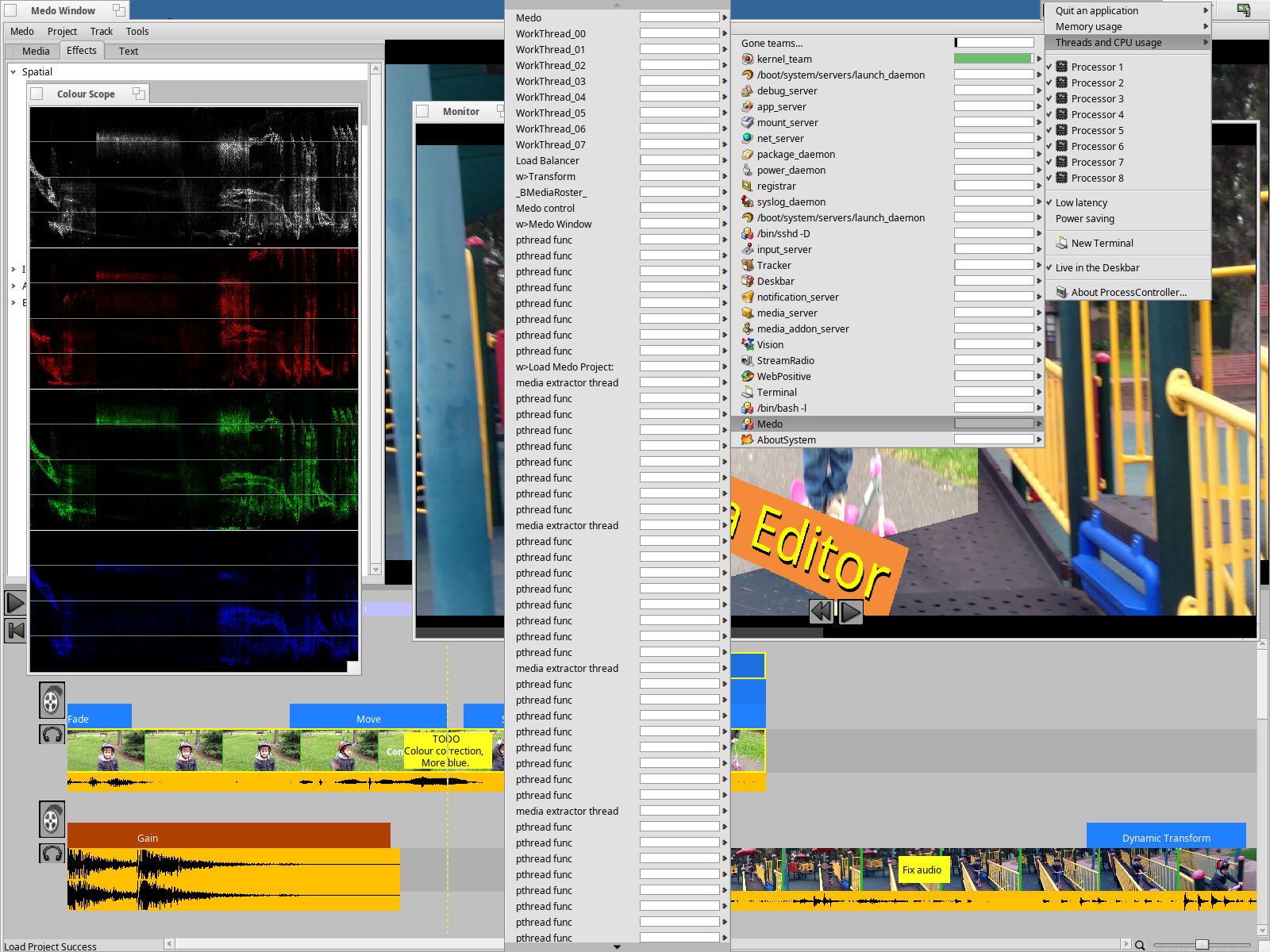

Testing threading behaviour on Haiku R1B2 - I think I have too many threads. Time for ThreadRipper …

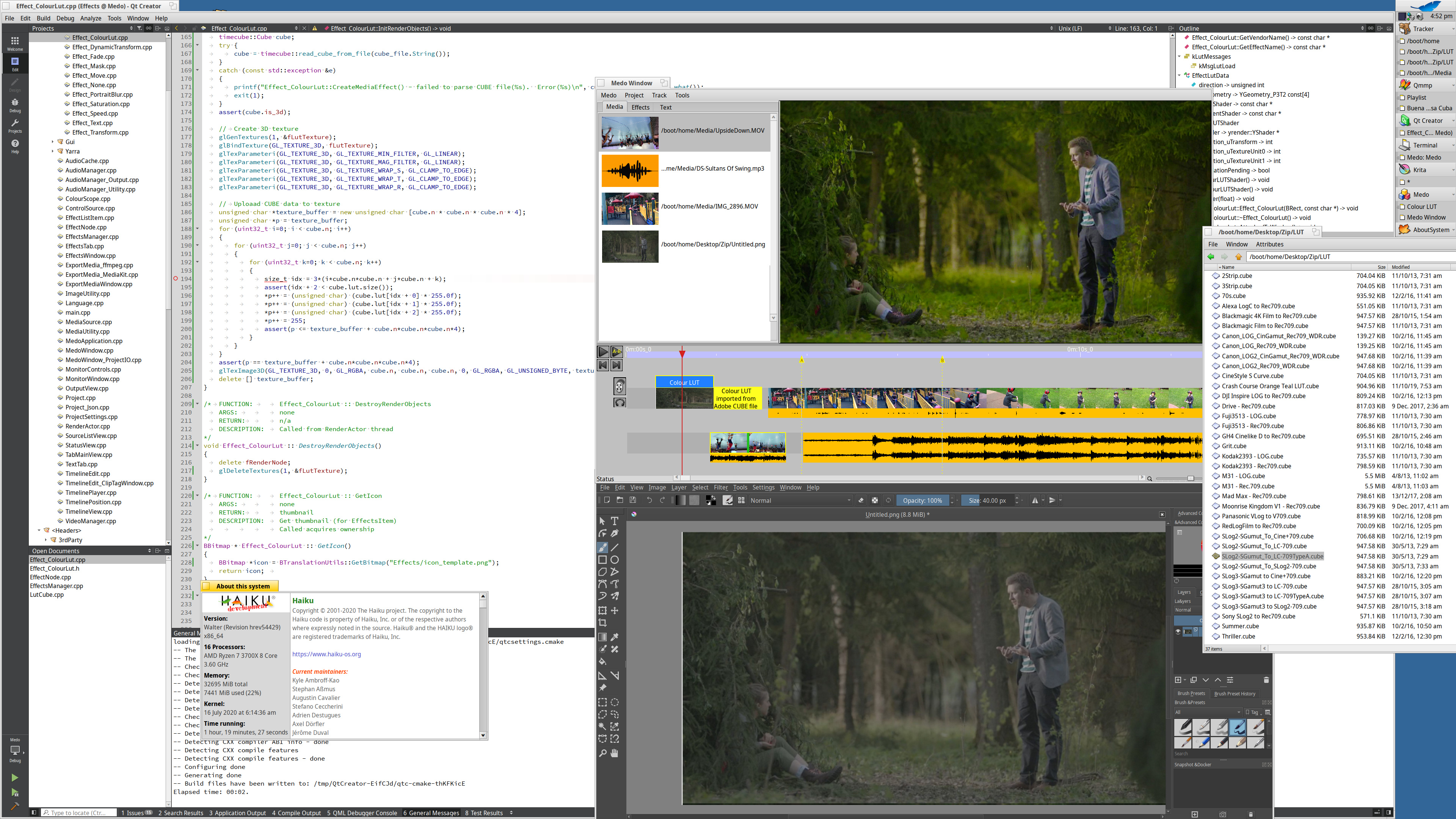

The big-boy video editors allow setting colour LUTs (Look Up Tables) to enhance the video colours and provide cinematic visuals. I’m sure most of the YouTube reviewers would immediately lament how incomplete a video editor is if it doesn’t support colour LUTs. After a little bit of research, I realised that this feature wouldn’t take long to support.

I rolled up my sleeves, spent an hour parsing the Adobe CUBE file format, another hour supporting the new rendering feature, and finally an hour on the GUI. So in a few hours of work, I managed to add support for Adobe CUBE files, which are supported by essentially all the big-boy video editors.
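For anyone curious why the parsing part is quick: a 3D `.cube` file is plain text, with a `LUT_3D_SIZE N` line followed by N×N×N rows of three floats, where the red index changes fastest. The sketch below is not the editor's actual parser; `CubeLut3D`, `ParseCube` and `At` are illustrative names, 1D LUTs are rejected, and `TITLE` / `DOMAIN_MIN` / `DOMAIN_MAX` are simply skipped.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical container for a parsed 3D LUT.
struct CubeLut3D {
    std::size_t size = 0;    // N from the LUT_3D_SIZE line
    std::vector<float> rgb;  // N*N*N entries, 3 floats each, red index fastest
};

// Parses a 3D .cube LUT from a text stream; returns false on malformed input.
bool ParseCube(std::istream& in, CubeLut3D& lut)
{
    lut = CubeLut3D{};
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream tokens(line);
        std::string first;
        if (!(tokens >> first) || first[0] == '#')
            continue;  // blank line or comment
        if (first == "LUT_3D_SIZE") {
            if (!(tokens >> lut.size) || lut.size < 2)
                return false;
            lut.rgb.reserve(lut.size * lut.size * lut.size * 3);
        } else if (first == "TITLE" || first == "DOMAIN_MIN" || first == "DOMAIN_MAX") {
            continue;  // metadata, ignored in this sketch
        } else if (first == "LUT_1D_SIZE") {
            return false;  // 1D LUTs are out of scope here
        } else {
            // Anything else is treated as a data row of three floats.
            std::istringstream row(line);
            float r, g, b;
            if (!(row >> r >> g >> b))
                return false;
            lut.rgb.insert(lut.rgb.end(), {r, g, b});
        }
    }
    return lut.size != 0 && lut.rgb.size() == lut.size * lut.size * lut.size * 3;
}

// Returns a pointer to the RGB triple stored at grid position (r, g, b).
const float* At(const CubeLut3D& lut, std::size_t r, std::size_t g, std::size_t b)
{
    assert(r < lut.size && g < lut.size && b < lut.size);
    return &lut.rgb[((b * lut.size + g) * lut.size + r) * 3];
}
```

For rendering, the table would typically be uploaded as a 3D texture so the GPU can interpolate between grid points; `At` above only does an exact grid lookup.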

The screenshot below shows the original image file (bottom), in the RAW camera colour space, while the image in the video editor has the LUT applied. 2 MB image attached.

Still on track to release with R1 final.

Wow @Zenja! I think you’re on track to create Haiku’s first “killer app”! Keep up the amazing work!

Nice progress, Zenja!

I took the liberty to adjust the thread title to be more informative.

Keep up the great work, @Zenja !

Some suggestions:

Multicam editing: nowadays almost essential even for “consumer use”, but many open NLEs still don’t support it. Here’s an interesting post about it: http://russandbecky.org/blog/2014/02/25/multicam-editing/

If you can do multicam, then auto-syncing tracks by audio alignment would be really great. Some time ago I discovered this cool project: https://github.com/protyposis/AudioAlign
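Just to illustrate the idea of aligning by audio, here is a naive sketch that finds the offset between two mono tracks with brute-force cross-correlation. This is not how AudioAlign works internally (I have not checked its algorithms), and `FindBestLag` is a made-up name. A real implementation would more likely use FFT-based correlation or audio fingerprints, since this loop costs O(samples × lags).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the lag in samples, searched over [-maxLag, maxLag], that maximises
// sum(a[i] * b[i + lag]). A positive result means the common sound appears
// that many samples later in `b` than in `a`.
int FindBestLag(const std::vector<float>& a, const std::vector<float>& b, int maxLag)
{
    assert(maxLag >= 0);
    int bestLag = 0;
    double bestScore = -1e300;
    const std::ptrdiff_t bSize = static_cast<std::ptrdiff_t>(b.size());
    for (int lag = -maxLag; lag <= maxLag; ++lag) {
        double score = 0.0;
        for (std::size_t i = 0; i < a.size(); ++i) {
            const std::ptrdiff_t j = static_cast<std::ptrdiff_t>(i) + lag;
            if (j < 0 || j >= bSize)
                continue;  // no overlap at this sample
            score += static_cast<double>(a[i]) * b[static_cast<std::size_t>(j)];
        }
        if (score > bestScore) {
            bestScore = score;
            bestLag = lag;
        }
    }
    return bestLag;
}
```

Dividing the returned lag by the sample rate gives the time offset by which one camera's track would be shifted on the timeline.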

Hope this may inspire your work…

Where can I test it?

Still have tons of bugs to fix, features to add, it is not ready for regular users. You only get to make an initial impression once. It will be open source when I release it. Expect it to be ready the same time R1 final is released.

For example, today I was fixing a bug with multiple LUTs and dynamically adding/removing LUTs which are cached. The buggy version would overwrite the LUT for an unrelated clip since they were shared. It’s little bugs like this which drive the user crazy and make them distrust the program. I cannot ignore the known bugs. I expect to spend 6 more months on fixing the known bugs, so don’t expect anything in 2020. All my headers have 2021 copyright.
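One common way to avoid this class of bug, sketched below under my own assumptions (this is not the actual fix in the editor), is to key the cache by file path and hand each clip a pointer to immutable LUT data. Changing a clip's LUT then swaps that clip's pointer and never writes into data another clip may share. `Lut`, `LutCache` and `Clip` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <vector>

// Hypothetical LUT payload; treated as immutable once cached.
struct Lut {
    std::string path;
    std::vector<float> rgb;
};

class LutCache {
public:
    // Returns the cached LUT for `path`, creating it on first use.
    std::shared_ptr<const Lut> Acquire(const std::string& path)
    {
        assert(!path.empty());
        auto it = fEntries.find(path);
        if (it != fEntries.end()) {
            if (auto existing = it->second.lock())
                return existing;  // still in use by some clip
        }
        auto lut = std::make_shared<Lut>();
        lut->path = path;  // real code would parse the file here
        fEntries[path] = lut;
        return lut;
    }

    // Number of cached LUTs that at least one clip still references.
    std::size_t LiveCount() const
    {
        std::size_t count = 0;
        for (const auto& entry : fEntries)
            if (!entry.second.expired())
                ++count;
        return count;
    }

private:
    // Weak references, so a LUT is freed once the last clip drops it.
    std::map<std::string, std::weak_ptr<const Lut>> fEntries;
};

// Hypothetical clip: owns a reference to its LUT, never the data itself.
struct Clip {
    std::shared_ptr<const Lut> lut;
};
```

Two clips using the same file share one `Lut`, but assigning a different LUT to one clip leaves the other untouched, and removing the last user releases the cached data.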

But I don’t want to wait another 10 years…

No problems, meantime I’ll continue to use Windows + Vegas.

10 years? Was honestly hoping R1 would be out in 2022 (2021 for beta3).

There are more than 1000 open bugs in the R1 milestone. There are about 1000 in the beta2 milestone, which was in development for about a year.

So, 2 years sounds possible if we look just at that. However, new tickets keep being added as we progress, so it may take a bit more time. I think R1 has been around 1000 tickets for a long time now and not really going down all that much.

And also people really want us to add 3D acceleration and other things like that which would delay things by a few more years… We’re trying to avoid that too, so we can ship something

Just a question: are you developing it on top of (platform independent) libs, or is every function integrated inside the NLE itself?

Another - hopefully inspiring - idea: what about online collaboration between users?

There were some interesting DAW projects that had this feature (but unfortunately abandoned, such as Koblo) or new-approach “online jamming software” such as Online Jam but - in any case - this feature could really help teams of creators follow (and contribute to) the workflow even remotely.

C++17, Haiku (BeOS) API for GUI + messaging + Media Kit, OpenGL 3.3 + GLSL for effects, ffmpeg for encoding and audio, Actor Programming model. It’s a Haiku native / original application, ridiculous number of threads, drag and drop everywhere. QtCreator, Haiku Debugger, jamfiles, Tracker Grep, Krita, Web+. I custom built my home PC to be Haiku compatible, it’s actually my default / primary boot system.

Still need to figure out how to capture voice recordings, audio effects, and then add 100 or so visual effects before release.

After release, I’ll investigate adding After Effects type capabilities for R2.

@Zenja, please also add a retro style counter bar, like in FPX, but late 80s style, blending into Haiku interface.

@Zenja kudos!

I like Kdenlive but can you look at Lightworks? For some inspiration? For me audio level adjusting is more precise and intuitive in Lightworks than Kdenlive.

You asked for it:

I’ve added a teletext type feature, as well as incrementing counter (dates / time / money). Also, in the background you can see JSON file for dynamic GLSL shader effect. A shader effect can be downloaded from websites like ShaderToy and tweakable parameters can be modified from a dynamic GUI.

Getting there …

I am so loving that this is going to be the iMovie (or maybe more) of Haiku. Another suggestion, maybe move the play/pause buttons on the left, to the video window, under the video frame? Like in iMovie or Final Cut Pro. The buttons just look cramped in there.

Also still missing the general project time counter inside the application :D. It can be placed just above the timeline, and it would be a nice area to house additional future features.

How many video/audio tracks does it support right now?

Awesome. Keep going!

But can I ask: why didn’t you continue working on Clockwerk, and instead started your own Medo project from scratch? Just curious.