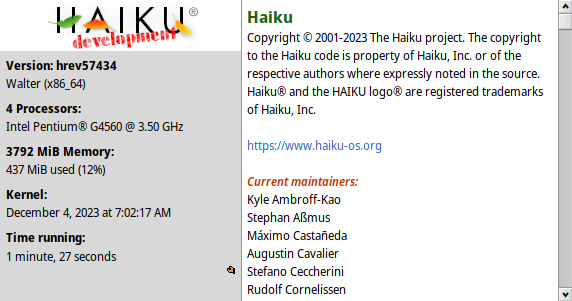

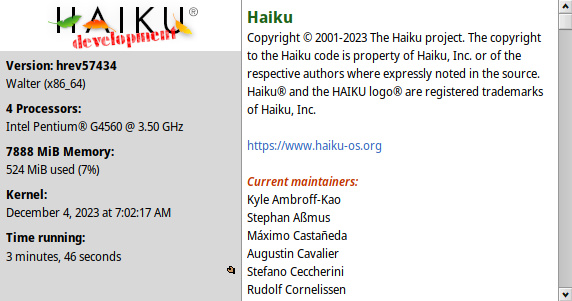

Here are two pics. Everything is identical except the amount of RAM: an 8 GB stick instead of a 4 GB stick. As shown, it’s using LESS memory with 4 GB installed than with 8 GB. Shouldn’t it be using the SAME amount in both cases? Haiku needs what it needs. It shouldn’t care how much RAM there is above and beyond that, unless specifically told to USE more. But it’s acting like, because there is twice as much room, it can stretch out a little bit more than before. But WHY? It can’t be purely based on a percentage of available RAM, because it’s using 7% instead of 12%. But it’s also not using all the RAM it NEEDS with 4 GB, because then the number should remain the same with 8 GB.


There is no sense in wasting RAM, so if there is RAM that is unused, it will be used as much as possible to cache things.

That is an oversimplification. It could easily have used as much as it NEEDED (assuming 524 MB was that) with 4 GB, but it didn’t. And it used MORE with 8 GB. WHY? At what amount of RAM will it stop seeking more to use? 16 GB? 32 GB? 64 GB? Just curious, mainly.

I’m not sure where I heard this, but I think there is something that is using ALL the rest of the RAM, but is not shown in AboutSystem.

EDIT: Found it!


Thanks for the education! I love learning new things in areas that interest me.

The system needs to track what each page of RAM is allocated to. Currently, these pages are 4 KB each, so we’re talking one or two million pages here. It turns out that tracking so many things does require some RAM, since we have to store that data somewhere. Even if the data for each page is just a few bytes, this immediately turns into several megabytes of RAM used… just to keep track of what the RAM is being used for.
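To put rough numbers on that, here is a small Python sketch of the arithmetic. The 4 KB page size comes from the post above; the 40 bytes of bookkeeping per page is purely a made-up figure for illustration, not the real size of Haiku's per-page structure.

```python
# Rough estimate of how much RAM is spent just tracking pages.
PAGE_SIZE = 4 * 1024   # 4 KB pages, as mentioned above
GIB = 1024 ** 3

# ASSUMPTION: hypothetical bookkeeping cost per page, chosen only
# to illustrate the scaling. The real figure may differ.
ASSUMED_BYTES_PER_PAGE = 40


def page_count(ram_bytes, page_size=PAGE_SIZE):
    """Number of whole pages that fit in the given amount of RAM."""
    return ram_bytes // page_size


def tracking_overhead_bytes(ram_bytes, bytes_per_page, page_size=PAGE_SIZE):
    """Bytes needed to keep one bookkeeping record per page."""
    return page_count(ram_bytes, page_size) * bytes_per_page


for gib in (4, 8):
    pages = page_count(gib * GIB)
    overhead = tracking_overhead_bytes(gib * GIB, ASSUMED_BYTES_PER_PAGE)
    print(f"{gib} GiB: {pages} pages, about {overhead / 1024**2:.0f} MiB of bookkeeping")
```

Whatever the real per-page cost is, it doubles when the RAM doubles, which is one reason the "used" figure grows with the size of the stick.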

One solution would be to use larger “pages” (for example, Apple M1 machines will typically use 16 KB pages) so there are fewer of them to track. On Linux, this can be done, but only at compile time. Having a variable page size (decided at boot depending on the available memory) is quite a bit more complex. Also, larger pages mean you can’t allocate less than one page to anything, so in places where only a smaller chunk of memory is needed, the remaining part of the page is wasted. That explains why pages remain relatively small: to avoid wasting more than a few kilobytes in such situations.
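The trade-off can be sketched in a few lines of Python. The 5000-byte request is just an example value I picked, and this assumes the simplest possible model where every allocation is rounded up to whole pages.

```python
def wasted_bytes(request_bytes, page_size):
    """Bytes left unused when a request is rounded up to whole pages."""
    pages = -(-request_bytes // page_size)  # ceiling division
    return pages * page_size - request_bytes


# Example: a hypothetical 5000-byte request with 4 KB and 16 KB pages.
for page_size in (4 * 1024, 16 * 1024):
    waste = wasted_bytes(5000, page_size)
    print(f"{page_size // 1024} KB pages: {waste} bytes wasted")
```

With 4 KB pages the request takes two pages and wastes 3192 bytes; with 16 KB pages it takes one page and wastes 11384 bytes. Fewer pages to track, but more slack in each one.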

There are other factors, for example, more things will be moved to swap if you have less RAM.
