This is what I see too: the rules are vague enough to be wielded however the wielder sees fit. It’s very worrying.

Some of my analysis draws on my previous post here, which cites a source written by a UK lawyer specialising in tech law. As I said in that post, none of this qualifies as legal advice. You will have to trust my qualified (but not legally binding) sources on this, at your own risk.

For the record, I am advocating a more careful approach than ignoring the OSA or assuming that Haiku, Inc. is exempt from the law. I would think this needs slightly less due diligence on sourcing, since it is the risk-averse option. Yet at the time of writing, proponents of riskier approaches have not come forward with sources of similar quality to support them.

I remain of the opinion that we can hang our hat on not having a “significant” number of UK users. 12k registered users - not all active, and a minority in the UK - make us a minnow. Over the first year or so of the new regime we will see if anyone is getting prosecuted, and benefit from the formation of case law.

I would be interested to know how many of the Haiku committee members who decided to bin off UK forum users are actually from the UK. I guarantee that UK members would be a lot more cavalier, because they know the state things are in here. British enforcement bodies are hollowed out and do not have the bandwidth to prosecute any but the most egregious offenders, and I doubt Ofcom will be any different.

Rivers in the UK are contaminated by sewage because the water companies are not being prosecuted for spills. Ride a bike and the potholes will buckle your wheel, that is, until you are hit by a car that quite likely lacks valid insurance, because basic policing is underfunded.

My background is in town planning, and if you build something without planning permission the council do not just turn up with a bulldozer to knock it down. They start by inviting you to seek retrospective planning permission; if that is refused you can appeal, and if the appeal is unsuccessful the matter can go to court. This process can go on for years, and the defendant can at any point cut their losses and remove the unlawful development, as indeed Haiku can with its forum. What is the process that might lead to prosecution in the case we are discussing?

I absolutely agree with this. I think your own statement is what’s key here though: “Over the first year or so of the new regime we will see if anyone is getting prosecuted, and benefit from the formation of case law.”

We don’t want to be who forms the case law.

Thanks for the previous answers to my questions. I’d appreciate it if you could clarify Haiku, Inc.’s position on questions 3 and 4 (reaching out to Ofcom for guidance on what counts as a significant number of users, and refraining from geo-blocking UK users until you get that guidance).

Absolutely. I’m not sure what “Ofcom” is however. Would their response indicating we are safe override any legal responsibility in the UK?

Ofcom, or the Office of Communications, is the UK’s government-approved regulatory and competition authority for the broadcasting, telecommunications and postal industries of the UK. To quote Wikipedia: “It has a statutory duty to represent the interests of citizens and consumers by promoting competition and protecting the public from harmful or offensive material”.

With regard to the Online Safety Act, Ofcom is the primary regulatory authority responsible for enforcing the OSA’s requirements, as well as for developing guidance and codes of practice for online platforms:

So yes, their response would determine if the OSA applies to our forum and if so, in what way.

People in the USA aren’t responsible for enforcing other countries’ laws. We aren’t even responsible for helping our own law enforcement if we don’t want to. If they want to prevent their people from seeing something or reading something, that’s their business. That’s the problem you get when your country has no real right to free speech.

For the people saying we can’t ignore the OSA: if we aren’t actively offering things in the UK, then I think we can ignore it. If you want to geo-block, then I say just do it and then it’s out of our hair. If I make a blog page about my cat, it’s probably available in the UK. That’s not something I did; it’s just the way the internet works. It’s not my job to be the UK’s police, nor is it Haiku’s.

Follow up question… how do we contact them? There are no digital contact methods listed that are relevant to the OSA:

Exactly!

The UK is trying to do the same thing as China with the Great Firewall, but wants to bully websites into doing it for them…

I’ve never seen anything like that…

I’m French, so I’m accustomed to stupidity, but this, this is baffling me.

This rings very true. Despite the potential for abuse of vaguely worded legislation, the UK government only puts effort into something if (1) it will generate revenue or (2) it’s a flagrant (obvious) abuse of the rules. The resources to do more don’t exist. The law is mostly about pornography and internet safety. They will put some effort into making sure large sites and adult sites comply. There will probably be no resources to pursue small, unrelated forums based outside the UK when there is no gain to be made, no real risk in leaving them alone, and the case would be hard to win anyway. However, I would still be concerned and want some kind of plan in place if I were running such a forum.

That’s a good question. There is this mailing address:

Online Safety Policy Delivery Team, Ofcom, Riverside House, 2A Southwark Bridge Road, London SE1 9HA

During the Call for Evidence stage of the online safety regulation, they listed the following email address: os-cfe@ofcom.org.uk, but I’m not sure whether it’s still active.

However, I’ve just found there’s an online tool provided by Ofcom to check if the Online Safety Act applies to a service. It can be accessed from here: New rules for online services: what you need to know - Ofcom (the direct link is Ofcom Regulation Checker), and once started it contains a lot of useful info.

The first question there is: “Does your online service have links with the UK?”, and in the More Information section we can read the following (emphasis mine):

…

The UK is likely to be a target market if you direct your service towards UK users in the way you design your service, promote your service, receive revenue from your service, or in any other way.

…

Even if the UK is not a target market, your service will still have links to the UK if any part of it has a significant number of UK users.

…

The Act does not set out how many UK users is considered “significant”. You should be able to explain your judgement, especially if you think you do not have a significant number of UK users.

If you answer the question above with “No” (i.e. the online service does not have links with the UK), the tool immediately ends with the statement:

Online Safety Act is not likely to apply to your online service

If you answer “Yes”, you get five additional questions, and based on my answers as they apply to this forum (user-to-user service, no pornographic content, no exemptions, etc.) the result is:

Online Safety Act is likely to apply to your online service, or part of it. Based on your answers, your online service (or a relevant part of it) is likely to be a user-to-user service.
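The first question of the checker, as quoted above, boils down to a simple rule: a service has links with the UK if the UK is a target market or if it has a significant number of UK users, and if neither holds the tool stops. A minimal Python sketch of just that branch follows, assuming two yes/no inputs; `Service`, `targets_uk`, `significant_uk_users`, `has_uk_links` and `first_question_outcome` are hypothetical names, and the five follow-up questions are deliberately not modelled.

```python
from dataclasses import dataclass

# Result text quoted from the checker; CONTINUE is a hypothetical placeholder.
NOT_LIKELY = "Online Safety Act is not likely to apply to your online service"
CONTINUE = "Answer the follow-up questions"


@dataclass
class Service:
    # Hypothetical inputs paraphrasing the checker's "More Information" text.
    targets_uk: bool            # designed, promoted or monetised towards UK users
    significant_uk_users: bool  # the provider's own judgement; the Act sets no number


def has_uk_links(service: Service) -> bool:
    # Links with the UK: the UK is a target market, OR (even if it is not)
    # the service has a significant number of UK users.
    return service.targets_uk or service.significant_uk_users


def first_question_outcome(service: Service) -> str:
    # "No" ends the check immediately; "Yes" leads on to further questions,
    # which this sketch does not model.
    if not has_uk_links(service):
        return NOT_LIKELY
    return CONTINUE


forum = Service(targets_uk=False, significant_uk_users=False)
print(first_question_outcome(forum))
```

Everything therefore hinges on `significant_uk_users`, which is a judgement the provider has to be able to explain rather than something a script can compute.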

In that case it also suggests you “Check how to comply with your illegal harms obligations. You can use our step-by-step guide to understand what you need to do next”, which leads to the following page: Check how to comply with the illegal content rules - Ofcom, which reveals that the guide is not ready yet:

In early 2025, we’ll be launching a tool to help you check how to comply with illegal content duties.

The tool will be divided into four steps that follow Ofcom’s risk assessment guidance. Following these steps will help you to comply with the illegal content risk assessment duties, and the linked safety duties and record-keeping and review duties.

The UK’s law enforcement and courts not being very effective at the moment isn’t a solution; in fact, it’s a large part of the problem here. If the system were effective and applied the law consistently, then when a law was too harsh everyone would notice and take issue, and the law would be replaced rapidly as a result. But a harsh law that is loosely enforced (if at all) just lurks until someone decides to weaponize it.

Selective enforcement of laws, where the selective enforcement is entirely at the pleasure or whims of the enforcers (rather than enshrined in law itself), is just a “soft” kind of tyranny. Because then you don’t really have a country with “rule of law”, you have a country ruled by the whims of those who hold public office, who use the laws as justifications for whatever they were going to do anyway.

And Haiku, Inc., it seems, would just rather not play that game. The odds that the law would get applied to the Inc. are low, but if it’s not really up to the laws but up to the whims of the “ruling class” then, in fact, there’s nothing we can reasonably do to prevent such action being taken against us, and no guarantee that it would be reasonable if it did. The Inc. itself is a USA-registered nonprofit corporation, but some of its officers live in the E.U., and cross-border traffic is far from zero in either case.

Well, according to their compliance tool, it seems like Haiku fits the bill. We don’t seek out people in the UK; they just happen to be able to access the site. And we don’t have a significant portion of UK users. Leave it at that and we are fine. Not fine? Geo-block and be done with it. Let our UK people figure it out.

Do we have a tutorial or some advice on how to set up and use a VPN on Haiku?

I have no idea what it is or how to get it working!

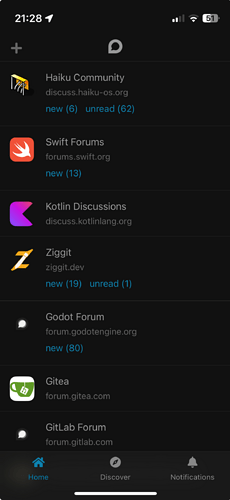

The funny thing is every other Discourse site I have a login to has yet to even mention this at all.

It’s quite a few. I have a number of them because of work; Haiku is the main one I read regularly for fun, though.

Have you read the Online Safety Act? Have you read what OFCOM says about it? Have you read what the UK government says about it?

The answer is NO to all three, because if you had done any of the above you would not have written what you wrote.

This is what the government says:

The Online Safety Act 2023 (the Act) is a new set of laws that protects children and adults online. It puts a range of new duties on social media companies and search services, making them more responsible for their users’ safety on their platforms. The Act will give providers new duties to implement systems and processes to reduce risks their services are used for illegal activity, and to take down illegal content when it does appear.

Haiku is not a social media company or a search company.

The Act is aimed at protecting children and vulnerable adults from exploitation, in particular by companies involved in pornography and on platforms run by companies like Facebook and Twitter.

The UK is not like the USA. The authorities are not going to waste time on small websites that aren’t involved in areas of concern.

In fact, it is most unlikely that the Act would apply to Haiku as there are very few UK users and the site is not directed at the UK.

Tomorrow I shall write to Ofcom and seek their advice on whether Haiku needs to do anything at all. I shall publish their answer here.

For those who don’t know, OFCOM is the Office of Communications, which oversees the internet, amongst other things. Only OFCOM would bring a prosecution, and the chance of that happening is vanishingly small.

Finally, I know Volodroid has already posted this link, but if you missed it, here it is again:

UK government explanation of Online Safety Act

I don’t know how many monthly active users from the UK we have on this site. My educated guess is fewer than 1,000, and my IANAL assumption is that this is far from a significant number. But let’s assume for a moment that we do qualify as a service with links to the UK. Then, according to the Quick guide to illegal content risk assessments - Ofcom, we are supposed to follow four steps:

Step one: Understand the kinds of illegal content that need to be assessed

Step two: Assess the risk of harm

There’s a list of 17 kinds of priority illegal content that need to be assessed separately. Ofcom provides the Risk Assessment Guidance and Risk Profiles document. Ideally, we would end up self-assessing as a low-risk service, as defined by Quick guide to illegal content codes of practice - Ofcom:

If your service is low or negligible risk for all kinds of illegal harm, we propose to call it a ‘low risk service’ and the minimum number of measures will apply.
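The “low risk service” rule quoted above is an all-or-nothing check across every kind of priority illegal content. Below is a minimal Python sketch of that check, assuming one rating per kind; the `Risk` levels beyond “negligible” and “low”, the `kind_N` keys and `is_low_risk_service` are hypothetical stand-ins, not Ofcom’s own terms.

```python
from enum import Enum
from typing import Dict

PRIORITY_KINDS = 17  # kinds of priority illegal content to assess separately


class Risk(Enum):
    # "negligible" and "low" appear in the quoted guidance;
    # MEDIUM and HIGH are assumed here as the remaining levels.
    NEGLIGIBLE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def is_low_risk_service(assessments: Dict[str, Risk]) -> bool:
    # Every priority kind must have its own assessment.
    if len(assessments) != PRIORITY_KINDS:
        raise ValueError(
            f"expected {PRIORITY_KINDS} assessments, got {len(assessments)}"
        )
    # 'Low risk service': low or negligible for ALL kinds of illegal harm,
    # so a single MEDIUM or HIGH rating is enough to fail.
    return all(r in (Risk.NEGLIGIBLE, Risk.LOW) for r in assessments.values())


# Hypothetical placeholder keys; the real 17 kinds are listed by Ofcom.
example = {f"kind_{i}": Risk.NEGLIGIBLE for i in range(1, PRIORITY_KINDS + 1)}
example["kind_1"] = Risk.LOW
print(is_low_risk_service(example))  # True
```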

Step three: Decide measures, implement and record

The code of practice document provides a list of measures, for a low risk service these would be:

Governance and accountability

- Individual accountable for illegal content safety duties and reporting and complaints duties (document code: ICU A2)

Content moderation

- Having a content moderation function to review and assess suspected illegal content (ICU C1)

- Having a content moderation function that allows for the swift take down of illegal content (ICU C2)

Reporting and complaints

- Enabling complaints (ICU D1)

- Having easy to find, easy to access and easy to use complaints systems and processes (ICU D2)

- Appropriate action for relevant complaints about suspected illegal content (ICU D7)

- Appropriate action for relevant complaints which are appeals – determination (services that are neither large nor multi risk) (ICU D9)

- Appropriate action for relevant complaints which are appeals – action following determination (ICU D10)

- Appropriate action for relevant complaints about proactive technology, which are not appeals (ICU D11)

- Appropriate action for all other relevant complaints (ICU D12)

- Exception: manifestly unfounded complaints (ICU D13)

Terms of service

- Terms of service: substance (all services) (ICU G1)

- Terms of service: clarity and accessibility (ICU G3)

User access

- Removing accounts of proscribed organisations (ICU H1)

Many of the points above are already implemented as part of the Discourse service; the others would require providing more information to users or assigning governance responsibility. Overall, it doesn’t look like a tremendous amount of work.
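One way to keep track of that work is a simple checklist keyed by the measure codes in the list above. Below is a minimal Python sketch, assuming a hand-maintained set of completed codes; `LOW_RISK_MEASURES` and `outstanding` are hypothetical names, and because the post does not say which measures Discourse already covers, the `done` set in the example is purely illustrative.

```python
from typing import List, Set

# The low-risk measures listed above, keyed by code-of-practice code
# (descriptions shortened from the list).
LOW_RISK_MEASURES = {
    "ICU A2": "Individual accountable for illegal content, reporting and complaints duties",
    "ICU C1": "Moderation function to review and assess suspected illegal content",
    "ICU C2": "Moderation function allowing swift take-down of illegal content",
    "ICU D1": "Enabling complaints",
    "ICU D2": "Easy to find, access and use complaints systems",
    "ICU D7": "Action on complaints about suspected illegal content",
    "ICU D9": "Appeals: determination",
    "ICU D10": "Appeals: action following determination",
    "ICU D11": "Complaints about proactive technology that are not appeals",
    "ICU D12": "All other relevant complaints",
    "ICU D13": "Exception: manifestly unfounded complaints",
    "ICU G1": "Terms of service: substance",
    "ICU G3": "Terms of service: clarity and accessibility",
    "ICU H1": "Removing accounts of proscribed organisations",
}


def outstanding(done: Set[str]) -> List[str]:
    # Reject codes that are not on the list, e.g. typos.
    unknown = done - set(LOW_RISK_MEASURES)
    if unknown:
        raise ValueError(f"unknown measure codes: {sorted(unknown)}")
    # Dicts keep insertion order, so results follow the list above.
    return [code for code in LOW_RISK_MEASURES if code not in done]


# Hypothetical status: which measures Discourse already covers is not stated.
done = {"ICU D1", "ICU D2"}
for code in outstanding(done):
    print(code, "-", LOW_RISK_MEASURES[code])
```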

Step four: Report, review and update risk assessments

That’s an easy one:

We recommend that services report their risk assessment outcomes and online safety measures to a relevant internal governance body. For small services without formal boards or oversight teams, this can simply mean reporting to a senior manager with responsibility for online safety.

I’ve read through the 17 categories and the example cases provided in the Risk Assessment Guidance document, and at the moment I’m confident we would self-assess our forum as low or negligible risk for every kind of illegal harm mentioned in that document.

By the way, the examples in that document finally mention some numbers. In the examples with the lowest figures, 5,000 or 10,000 monthly UK users, the document places the services described into the low-risk bucket:

A health charity has a website which shares information about a particular illness. Part of the website is a forum where users can describe their experiences and offer support to one another. It has 10,000 monthly UK users. Users can share images, text and hyperlinks, and respond to each other’s comments.

People at the charity review all content and comment boxes, after it is uploaded by users. This includes checking any links that have been included. It also has a complaint system and looks at any complaints promptly.

The service has a few of the risk factors for CSAM URLs and image-based CSAM. However, as the service reviews all content shared by users, it would be likely to know if there had ever been CSAM images or URLs on the service, and there has never been any. The provider therefore concludes the service is low risk for CSAM URLs and image-based CSAM.

To sum it up, even in case the OSA does apply to our forum, that doesn’t look like the end of the world to my IANAL eye. There will be some work to be done, but at the moment I don’t see why the amount of that work should justify banning all of our UK users.

Thank you for the link. That is such a well-written document. I should have thoroughly read it before doing my own research, it would have saved me a lot of time. But hey, it was fun to do, and it’s nice to see my findings and conclusions align with Mr. Garett’s summary which covers the topic comprehensively.

That’s also very useful info, but the link is a bit off, as it should point to the next post in the thread there: New OfCom Rules - #3 by HAWK - Support - Discourse Meta

There is no monthly statistic I can check, but the average number of daily engaged users is about 45. Assuming those were all unique (which they aren’t), that would be around 1,400 users per month. That would require 60-70% of the forum to be from the UK to match your number, though in reality the daily engaged users are mostly the same people from day to day rather than different ones.
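The estimate above is an upper bound: daily engaged users multiplied by the days in a month, as if nobody came back on a second day. Below is a minimal Python sketch of that arithmetic, assuming a 31-day month (which gives the “around 1,400” figure; 30 days gives 1,350). `monthly_upper_bound` and `implied_uk_share` are hypothetical helper names. The UK figure being compared against is not stated in the post, so the 1,000 used here (the upper guess posted earlier in the thread) is only an illustrative input.

```python
def monthly_upper_bound(daily_engaged: int, days: int = 31) -> int:
    # Upper bound on monthly unique users: assumes nobody is counted on
    # more than one day, which the post notes is not the case.
    return daily_engaged * days


def implied_uk_share(uk_users: float, monthly_users: float) -> float:
    # Fraction of all monthly users that a given UK-user figure would represent.
    return uk_users / monthly_users


bound = monthly_upper_bound(45)
print(bound)  # 1395

# Illustrative UK figure only; see the lead-in.
print(f"{implied_uk_share(1000, bound):.0%}")
```

Because returning users make the true monthly total smaller than this bound, any given UK-user figure would imply an even larger UK share than the sketch prints.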