I agree in the sense that if someone wants something better, they should do something new instead of removing the teapot. However, moving or changing things is one step closer to removing them completely. Any moves should be a separate application. Any changes should be a setting you can turn on, not a forced change.

I wonder if changing the horse or the cat with the teapot could solve this funny argument, achieving a small but nice benchmark. It could also become a tool that evolves into a real benchmark by adding modular components (like Berometer).

#SaveTheHaikuTeapot

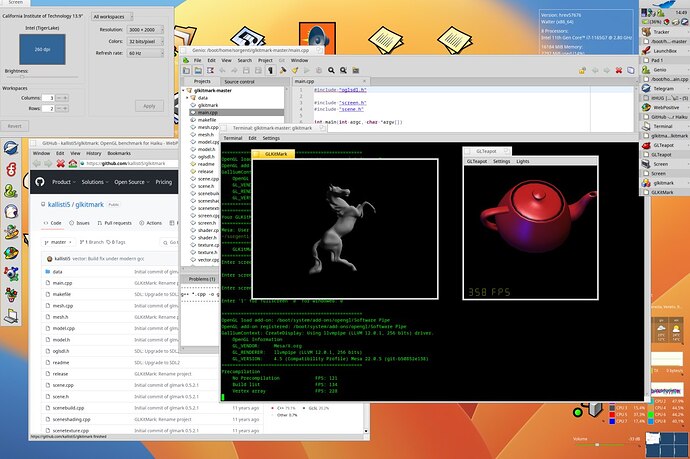

I’ve been hearing “GLTeapot is not a benchmark” for around 20 years now… maybe longer. Even before I had heard of Haiku.

What about extending GLTeapot a bit to make it into somewhat of a benchmark, by applying some heavy shader code similar to Furmark, so we could have GLFurryTeapot etc. as a menu option? People could then use it as a basic benchmark, because as long as it is there, this question is going to persist.

It would be funny too… what more reason is needed? Something like this:

- GLTeapot demo, Ref (Timeline: 8:10-8:30/15:45): I Built a Modern BeBox with HAIKU - YouTube

- GLTeapot demo, Inside Haiku Apps - Episode 43 - The GLTeapot Demo (youtube.com)

I agree, but it is fun to see what FPS numbers Youtubers/content creators get on various computer builds.

Various users like to see how fast their computers can push pixels, for example through the software rendering of GLTeapot on Haiku, without loading up some AAA+ game or “real” benchmarking tool.

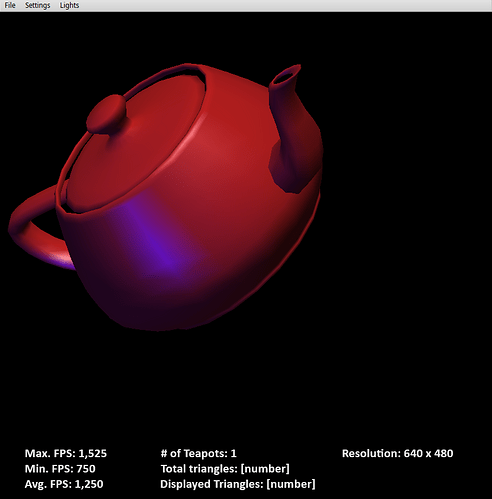

And this is why I advocate modifying GLTeapot so it can display the maximum/minimum/mean FPS as static numbers. That way you’re not trying to read a bazillion different numbers jumping up and down in one display, where you can’t even SEE what the maximum framerate is. It’s frustrating right now, because I can only guess that it’s something around 1,500fps, but I can’t be sure.
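To make the idea concrete, here is a minimal sketch of the bookkeeping such a display would need. It is not GLTeapot code; the class name `FrameStats` and its fields are hypothetical, and it assumes the app can measure how long each frame took in seconds.

```python
from dataclasses import dataclass


@dataclass
class FrameStats:
    """Running min/max/mean FPS built from per-frame durations (hypothetical helper)."""
    frames: int = 0
    total_time: float = 0.0
    min_fps: float = float("inf")
    max_fps: float = 0.0

    def add_frame(self, frame_seconds: float) -> None:
        """Record one rendered frame that took frame_seconds to draw."""
        if frame_seconds <= 0.0:
            return  # ignore bogus timer readings
        fps = 1.0 / frame_seconds
        self.frames += 1
        self.total_time += frame_seconds
        self.min_fps = min(self.min_fps, fps)
        self.max_fps = max(self.max_fps, fps)

    @property
    def mean_fps(self) -> float:
        """Mean over the whole run: frames drawn divided by time spent drawing."""
        return self.frames / self.total_time if self.total_time > 0 else 0.0


if __name__ == "__main__":
    stats = FrameStats()
    for dt in (0.001, 0.002, 0.001):  # made-up frame times, in seconds
        stats.add_frame(dt)
    print(f"min {stats.min_fps:.0f}  max {stats.max_fps:.0f}  mean {stats.mean_fps:.0f}")
```

The mean is computed as total frames over total time rather than as an average of the instantaneous values, so a few very fast frames don’t inflate it. The three numbers only change when a new extreme is hit, which is what makes them readable.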

But I also don’t know exactly how many triangles (maximum/minimum/mean) it’s displaying. I’d like to know what it’s pushing, just to know. Even information for information’s sake is still information.

Thanks for working on the patch. I’m happy to test it.

I do enjoy folks saying “HAIKU IS SO FAST! I GET THOUSANDS OF FPS!!” in youtube videos lol.

But… but… with a couple tweaks, we’d be able to state EXACTLY how fast we’re spinning teapots! And, can you imagine the sheer wonder and amazement, when we accomplish 1,500fps with 1,000 teapots all spinning at once? No, seriously… I’ve actually spawned so many Teapots, it’s almost a round SPHERE! Sure, it crawls at about 1-2fps, but, still!!!

Here is my concept for a revised GLTeapot demo, which shows more info, in a way that is actually more useful and less frustrating (i.e. easy to read). Now, the question is, how much would I have to pay someone to implement this?

The Total/Displayed triangles would be the same, if Backface Culling is disabled, as the number of triangles never changes. When Backface Culling is enabled, the number would fluctuate accordingly. This could be further refined with a Max/Min triangles displayed, which would remove numeric fluctuation.
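As a rough illustration of how a Total/Displayed counter could behave, here is a CPU-side sketch. It is an assumption-laden estimate, not how GLTeapot or Mesa count anything: real culling happens inside the GL pipeline, and the function name `count_displayed`, the `eye` position, and the counter-clockwise front-face convention are all choices made for this example.

```python
def sub(a, b):
    """Component-wise a - b for 3D tuples."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def count_displayed(triangles, eye, cull_backfaces):
    """Return (total, displayed) for triangles given as (v0, v1, v2) tuples.

    Assumes counter-clockwise winding marks the front face.
    With culling off, every triangle counts as displayed.
    """
    total = len(triangles)
    if not cull_backfaces:
        return total, total
    displayed = 0
    for v0, v1, v2 in triangles:
        normal = cross(sub(v1, v0), sub(v2, v0))
        # Front-facing if the normal points toward the eye.
        if dot(normal, sub(eye, v0)) > 0:
            displayed += 1
    return total, displayed


if __name__ == "__main__":
    front = ((0, 0, 0), (1, 0, 0), (0, 1, 0))  # faces +z
    back = ((0, 0, 0), (0, 1, 0), (1, 0, 0))   # same triangle, reversed winding
    print(count_displayed([front, back], eye=(0, 0, 5), cull_backfaces=True))
    print(count_displayed([front, back], eye=(0, 0, 5), cull_backfaces=False))
```

As the teapot rotates, the per-frame displayed count changes, so tracking its max and min over time would give the stable numbers described above.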

With changes in resolution (window size) and other options, you now know exactly how many FPS you’ll get on a given processor via software rendering. This may also change with versions of Mesa and/or revisions of Haiku or other optimizations/improvements made over time.

Obviously, I’m not expecting or asking anyone to take away time from more important Haiku-related tasks, but as time or interest permits.

I don’t understand why the teapot suddenly is a problem. What difference does it make to remove it?

BeOS provided it as a GL API demo. That is the only reason it is there.

Also, I dislike adding more stats without actually making it into a real benchmark. It’s 100% pointless. It’s basically more stats about nothing.

There are 3 versions of the GLTeapot model from the Utah GL project, at around 3k, 20k and 140k triangles. All of them are so simplistic that even mid-90s accelerators (e.g. the 300SX, circa 1994) could get multiple frames per second on the most complex one.

Even the very first PC 3D graphics accelerator, the Glint 300SX, could render the 3k model at around 100fps, the 20k model at around 15fps and the 140k model at around 2fps. It could only do 300k Gouraud-shaded triangles per second, and that ignores fill rate, so it might not have hit those rates in practice (it likely could hit the 15 and 2fps rates for the more complex models).
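Those frame rates follow directly from dividing the quoted triangle rate by the model size. A quick sketch of the arithmetic, using only the numbers from this post (the names are made up, and fill rate is ignored, as noted above):

```python
# Peak triangle rate quoted above for the 300SX, in triangles per second.
PEAK_TRIANGLES_PER_SECOND = 300_000

# Approximate triangle counts of the three teapot models mentioned above.
MODEL_TRIANGLES = {"3k": 3_000, "20k": 20_000, "140k": 140_000}


def max_fps(triangles_per_second: float, triangles_per_frame: int) -> float:
    """Upper bound on frames per second if triangle throughput is the only limit."""
    return triangles_per_second / triangles_per_frame


if __name__ == "__main__":
    for name, count in MODEL_TRIANGLES.items():
        print(f"{name}: {max_fps(PEAK_TRIANGLES_PER_SECOND, count):.1f} fps")
```

This gives 100, 15 and roughly 2.1 frames per second, matching the figures quoted for the three models.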

The GeForce 256 (circa 2000) could probably handle any of those models at over 100fps and would already have been fully fill-rate limited… that is just how bad this benchmark is.

Even the weakest GPU on sale today is stronger than any of those by orders of magnitude.

If someone wants detailed benchmarks, it’s probably not what they want. But it’s a demo. It’s for fun, for nostalgia, and for the many Haiku users who enjoy using it.

If it’s not for you, then don’t use it. But it’s most certainly not for you to remove. It’s not hurting anyone, so let us use what we want to use. (The royal ‘you’, not pointed at you specifically.)

But yes, I just wanted to remove the cap. Didn’t know that would inspire people with ideas to remove the teapot.

Ok, let’s realize something. We ONLY have software rendering right now, which is CPU-bound, correct? If we choose to play or develop 3D games, we should know how many triangles can be rendered with particular effects, shouldn’t we? To say “more stats about nothing” is to say knowing more about what our particular setup can do is meaningless… period.

And then you go off the rails, talking about hardware accelerator cards. Cards we DON’T have and CAN’T use in Haiku. THAT is information that is useless to us, because we CAN’T use THOSE cards in Haiku, so why even mention them?

Unless your attack is on the Utah Teapot project itself and how simplistic it is. Ok, so? Which version of the Utah Teapot does Haiku use? 3K, 20K, or 140K triangles? At least that’s information we can use… assuming GLTeapot adhered to one of those three versions and not a variation between one of them.

Yes, GLTeapot is not a game, so what it tells us is not an ACTUAL performance metric in an actual scenario. But, it gives us SOMETHING to work with. We can start to define a pathway for others to say, if you have THIS processor in a similar setup, you can expect to get THIS amount of performance in a game if it’s pushing THIS many triangles with THESE features enabled. There will always be variations, of course, because not everyone uses their computer in the same way. Plus, if we add features to GLTeapot in the future, such as collision detection and physics, that brings us that much closer to actual gameplay parameters, which further refines the information we are provided.

For example, I installed both Lugaru (a game I bought for my Windows system many years ago and played) and Nanosaur (a game I played briefly on the iMac in stores and on my Mac computer at home). Those are actual 3D games! And they both run decently on my computer (I think Lugaru is a better use-case, as it involves rag-doll physics). But I don’t know how many triangles Lugaru is pushing or how many fps I’m getting on my particular system. Or how many cores it’s using or anything like that. If I can play it smoothly on my computer, I don’t know anything about WHY. I don’t know how much FURTHER I could push things, til the frame rate dropped to an unacceptable level.

But getting back to GLTeapot. It exists in Haiku. It gives us options/settings we can change. Those changes do affect performance, to one degree or another, particularly if we start spawning more and more teapots. Why have ANY settings/options, if NONE of it matters at all? It has to mean SOMETHING, even as a graphics demo. If all GLTeapot did was spin a single teapot (speaking of which, why was there a change in the way it spun? It spun in a kinda figure-8 way originally. Why was that changed?) and there were no fps display or settings/options you could change, I’d argue it’s a nice distraction, but utterly pointless otherwise. But it’s more than just that. And it can be more than it currently is.

GLTeapot has become a type of mascot for Haiku. No other OS I have ever used, ever had such a thing. Not macOS, not Windows, not Linux/Ubuntu/whatever. It not only looks cool… it has features you can change and that actually affects performance!

I just want to see GLTeapot become more than it started out as. To become more useful and more informational. Why is that a bad thing?

SGI Irix also had several OpenGL demos like “Ideas in Motion” and “Atlantis”. Don’t quote me on it since I never owned an SGI workstation, but I believe they came with the default install.

Let’s also remember that those were SGI workstations… specifically tailored for graphics. Of COURSE they’d have OpenGL demos and such! Those systems were made MAINLY for that!

Software rendering of GLTeapot is STILL memory bandwidth bound on pretty much any computer from the past decade.

I mean, seriously, a 3GHz Pentium 4 has no problem rendering 140k triangles many times per second, to the point that it has nothing to do with the computational speed of the CPU and is almost entirely a matter of how many times per second we can copy one buffer to another. The point I was trying to make with all of this is that GLTeapot was never written as a benchmark. It’s a now-vintage API demo that people have misused as a benchmark since day one.

If you want a benchmark, as I already said, let’s make it that… but today it is NOT a benchmark of anything other than buffer copy speed. It isn’t even a good exercise of the modern GL APIs either, like at all…
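To put a rough shape on the buffer-copy argument, here is a back-of-the-envelope sketch. Everything numeric in it is a placeholder: the window size, the 4 bytes per pixel, and especially the copy bandwidth are assumptions picked for illustration, not measurements of any real machine or of Haiku.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one frame buffer in bytes (assumes a 32-bit pixel format by default)."""
    return width * height * bytes_per_pixel


def copy_bound_fps(copy_bytes_per_second: float, width: int, height: int,
                   bytes_per_pixel: int = 4) -> float:
    """Upper bound on FPS if one full-buffer copy per frame is the only cost."""
    return copy_bytes_per_second / frame_bytes(width, height, bytes_per_pixel)


if __name__ == "__main__":
    # Hypothetical effective copy bandwidth; substitute a measured value.
    assumed_bandwidth = 2_000_000_000  # bytes per second
    for w, h in ((320, 240), (640, 480), (1280, 960)):
        print(f"{w}x{h}: at most {copy_bound_fps(assumed_bandwidth, w, h):.0f} fps")
```

Under this model the ceiling scales with window area and not with teapot complexity, which is the behaviour you would expect if the copy, rather than the triangle work, is the bottleneck.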

Also, I’m not attacking anything… let’s not go there.

Ok, how do we make GLTeapot an actual benchmark, then? What would we have to change/improve in order to do that?

I was simply trying to offer a way that we’d be able to get more useful information from running GLTeapot than just a framerate indicator that is either locked to 60fps or unleashed and you have to try and guess just how many fps you’re actually getting, because the number is changing so fast.

Please don’t. GLTeapot is not supposed to be a benchmark. It is supposed to be a simple OpenGL demo for Haiku, let’s keep it this way.

Ok, we already KNOW Haiku can do OpenGL. Now what? Watch GLTeapot a few more times? The novelty of GLTeapot as “just” a graphics demo has long since gotten old. It’s there because it’s there. But I want it to represent more! It’s not that it can’t, it’s that people are resistant to the idea. Why, I do not know. Why does GLTeapot HAVE to be just an antiquated graphics demo? It shows framerate for a reason! It has options for a reason! So, why not expand upon that? Shoot, make a fork of it and call it “GLTeapot: Benchmark Edition”, for all I care! But I want this “cool demo” to showcase more than just “Oh, that’s nice… next!”

I mean, I’ve downloaded Lugaru and Nanosaur for Haiku, to actually push my system a bit (needless to say, Lugaru is maxing out all of my CPU’s cores/threads (just standing there doing nothing but bouncing on my toes). Gotta try setting everything to minimum and see if that helps). Why CAN’T we have something else to interest/entertain us in Haiku?

Demo Crews created demos to showcase what a computer was capable of. And those days were fun! Where is that same sense of adventure these days? We are essentially in the same place as the Atari ST was back in the 80’s. Demos pushed the 68000 CPU and Video Shifter farther than the ROM-based TOS ever would. Why can’t we do something similar… just to say we can? Give people who see Haiku for the first time something to wow them. Be Inc. did it back in the day with their bouncing ball demo (with the ball going from one box to the other) and their videos running in a flipbook and on a cube, etc. These may be yawn-inducing in Windows/macOS… but Haiku is NOT macOS/Windows. It’s the spiritual progeny of BeOS!

Can we not produce a video of Haiku doing all sorts of things at once, like Be, Inc. did with BeOS? Can we not optimize things, so all those things going at once, use as few resources as possible? Can’t we create a Haiku that actually EXCITES us, like BeOS once did?

I think we CAN… but whether or not we DO is up to those willing to “show off” Haiku rather than just create/use Haiku as just another OS doing the same things in a slightly different way.

It will not be so obvious after introducing hardware-accelerated drivers, multiple screens, GPU support etc… It can be used for basic diagnostics, to check that everything is working fine and on the correct GPU.