It’s taken a while, but I’ve done a lot to tweak the original concept, and if the pics don’t take up too much server space, I’m hoping to post revision 2 of it for everyone.

A lot more has been added in the past 10 days as well, such as finally having a first run. Since there’s so much to talk about, I’ll post that in its own part:

Part 1/2: First run

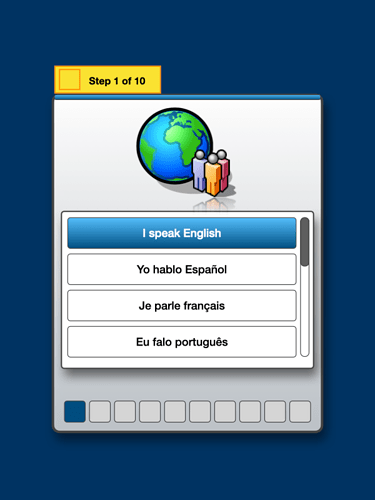

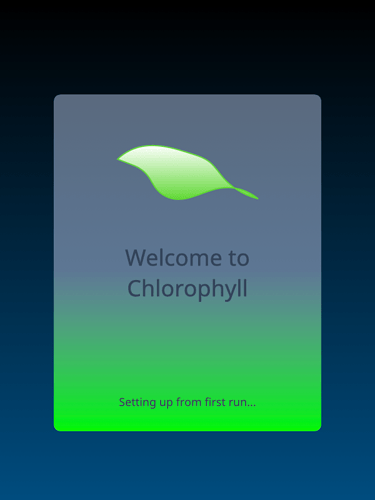

This borrows a lot from the Welcome/First Run ideas that I did back in June, so for those that saw that, this is roughly the same with some improvements to it. First Run starts by showing a quick intro (and I’m not quite sure what the animation should be yet).

After this, the next step would be to select a language. Because I only speak English, only English is in my concept shell so far; the other options are just placeholders at the moment…

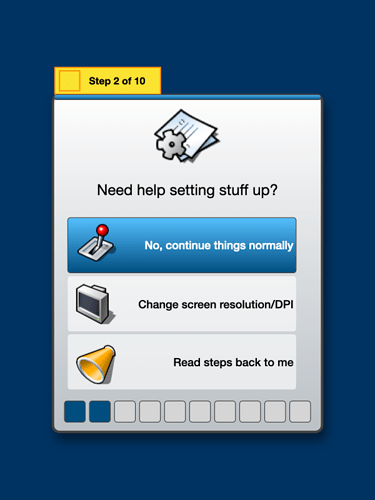

And now that the assistant would have an idea how to localize stuff, the next step is to ask if the screen resolution/DPI needs to be changed, or if someone needs to hear what’s on the screen (using Festival once this goes beyond a concept).

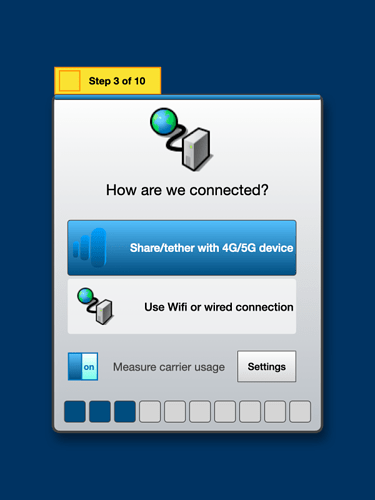

The third major part of First Run, now that we would have a working screen and language, is to get connected to the Internet. Everything I’ve been messing around on has been on macOS and Devuan, which both support iPhone tethering; I’m not sure if Haiku does this yet (although I think I remember reading that it supports Android tethering).

Otherwise, once it’s actually finished, the Chlorophyll shell would just connect to a Wi-Fi network (via wpa_supplicant) or to Ethernet using DHCP.

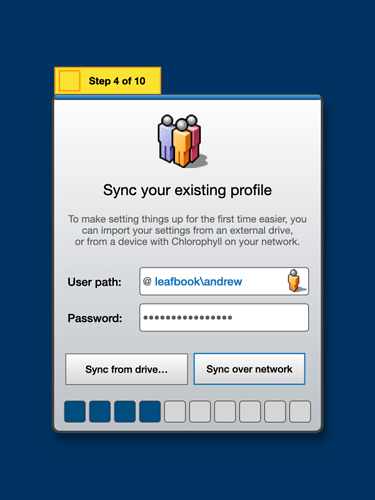

Now, for those who remember the previous setup concept, I imagined syncing profiles with the Haiku forum creds, which I realize is just a dream (so that’s been scrapped here). Realistically, we can sync profiles across machines on a network, or we can import settings from a USB drive or something.

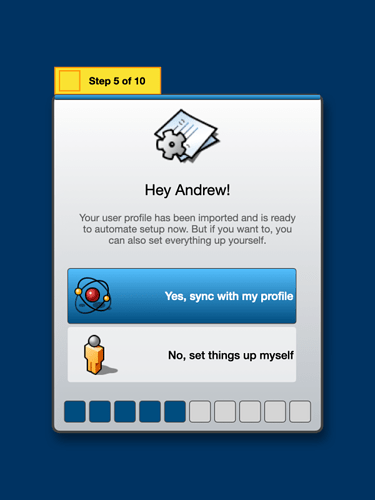

From there, if the import is successful (and I imagine that it would be really quick, since it’d just grab preferences files, not home/user data), the idea is to sync with a profile and skip everything else!

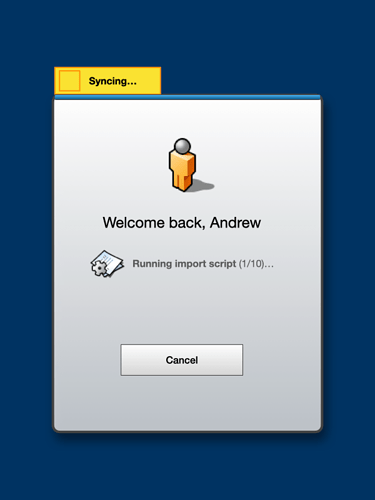
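To make the "preferences only" import a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the function name `import_preferences`, the idea that a profile on a USB drive keeps its preferences under a `config/settings` subfolder, and the plain file copy. A real version would depend on how Chlorophyll ends up storing profiles.

```python
import shutil
from pathlib import Path

# Hypothetical layout: the profile keeps its preferences under this subfolder.
SETTINGS_SUBDIR = Path("config") / "settings"


def import_preferences(profile_root: Path, home: Path) -> list[Path]:
    """Copy only preference files from a profile into home.

    Returns the relative paths that were copied. An empty list means
    nothing was imported, so First Run would carry on with the other steps.
    """
    source = profile_root / SETTINGS_SUBDIR
    if not source.is_dir():
        return []

    copied = []
    for item in sorted(source.rglob("*")):
        if item.is_file():
            relative = item.relative_to(profile_root)
            target = home / relative
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(item, target)  # keeps timestamps along with contents
            copied.append(relative)
    return copied
```

Because only the settings subfolder is walked, documents and other user data on the drive are never touched, which is what should keep the import quick.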

I’m thinking the feedback for this could look something like this while import scripts run:

But if someone doesn’t have everything nicely imported, then we’ll need to continue on.

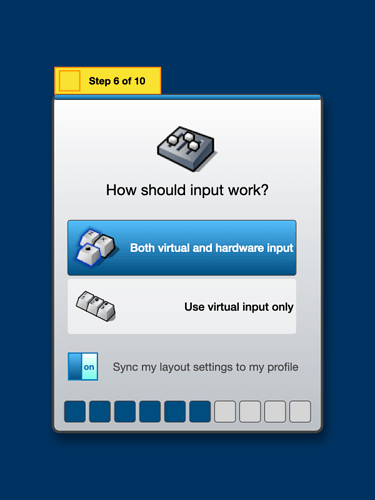

Mobile hardware can have both hardware and virtual keyboards, and can accept multiple inputs like keyboards, mice/trackpads, and joysticks. So we can choose to have the system accept these out of the box, or just focus on virtual keyboards alone, which would be useful on a phone that needs to be isolated and not accept external devices. We could import the keymap data from the user’s profile as well.

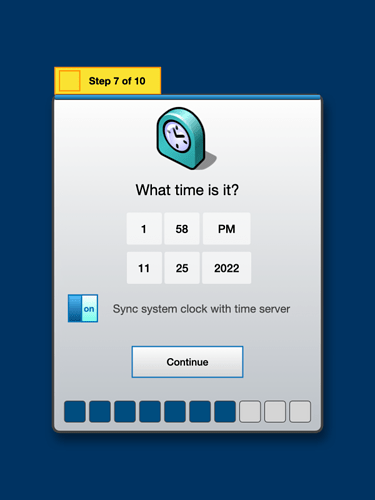

Again, time zone data should sync over and hopefully the time is right when things get set up, but we can set the time as well here.

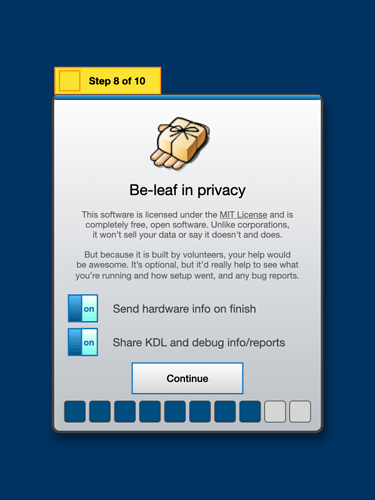

From here, especially if someone is new to the free/open source OS world, there’s a card that explains it and also gives the option to share the hardware info and how setup went, as well as crash and debug info:

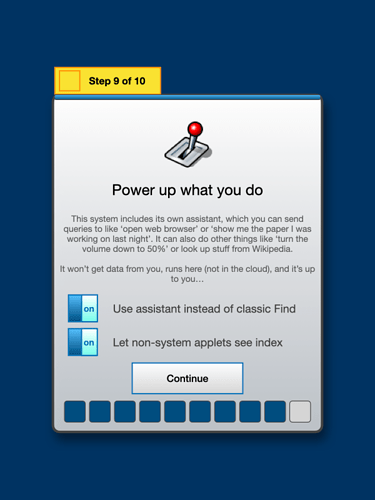

We would also have an option for a virtual assistant that would be integrated into the Leaf menu:

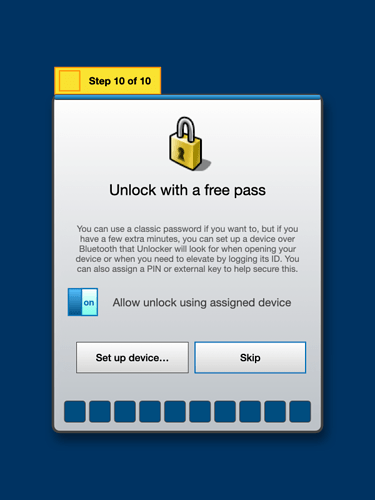

And finally, because custom builds of mobile tablets or phones cobbled together from parts probably won’t have technology like Face ID or Touch ID built in, we could use Bluetooth proximity paired with a device ID to quickly unlock the device after setting it up.

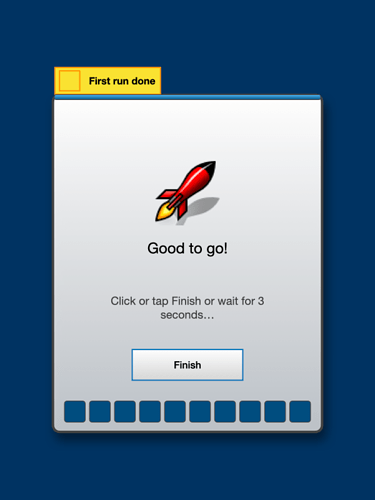
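The unlock decision itself could be as simple as the sketch below. This is only the logic, not a Bluetooth implementation: the `Sighting` type, the `should_quick_unlock` function, and the -60 dBm threshold are all made up for illustration, and I’m assuming signal strength (RSSI) stands in for proximity. The actual scan results would have to come from whatever Bluetooth stack the device has.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    """One nearby device seen in a scan (hypothetical structure)."""
    device_id: str  # identifier reported by the nearby device
    rssi_dbm: int   # signal strength; closer to 0 means nearer


def should_quick_unlock(paired_id, sightings, min_rssi_dbm=-60):
    """Unlock only if the paired device is seen and its signal is strong enough."""
    return any(
        s.device_id == paired_id and s.rssi_dbm >= min_rssi_dbm
        for s in sightings
    )


# Example scan: the paired phone is close, a stranger's device is even closer.
scan = [Sighting("phone-1234", -48), Sighting("other-9999", -30)]
print(should_quick_unlock("phone-1234", scan))  # True
print(should_quick_unlock("phone-0000", scan))  # False
```

Signal strength is noisy in practice, so a real version would probably want to average a few readings and fall back to a password when the paired device isn’t found.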

Once everything is done, First Run would automatically quit after a few seconds, or someone could click Finish.

Something I feel is missing from FirstBootPrompt and from the world of Be as a whole is some sort of welcome to the user interface (beyond a welcome page, which isn’t the same); this would add that:

And that’s what First Run/Welcome setup for Chlorophyll would look like! I’m hoping that this Glass Elevator idea makes its way into Haiku, both in the classical desktop version and in mobile devices (whether that’s done by me finishing this someday or someone else doing it).

But let’s get to what I imagine the main UI would look like!