This seems to make sense.

I think one of the biggest advantages of Haiku’s “slow development” is that you can see the mistakes others have made, which helps in choosing cleaner and smarter approaches.

Design by committee, on steroids. Linux has this disease.

I’d put the hardware DRM in its own accelerant, and I wouldn’t be afraid to create more accelerants, one per card manufacturer. In fact, I’d break the driver stack up into accelerants and accelerant add-ons wherever possible. There is a pervasive tendency to put too much hardware coverage into each driver. It’s OK to have separate drivers for Radeon, Radeon HD, Radeon 560, etc., and the same goes for all the other drivers. Better to have a simpler driver than to try to make one driver fit all, which ends up fragile whenever compatibility changes are made for other hardware: fix one card, break another.

I imagine some card functions do need a kernel-level driver, but that part should be kept minimal at all costs. Again, if there are lots of #ifdefs going on, break the driver up into hardware families.
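A minimal sketch of what “break it up into hardware families” could look like in C: instead of #ifdef chains, the driver picks one small hook table per family at init time, based on the PCI device ID. The family names, ID ranges and hook functions below are hypothetical placeholders, not real Radeon device IDs or actual Haiku accelerant hooks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-family hook table; a real accelerant would carry many more hooks. */
typedef struct {
	const char *name;
	int (*init_engine)(void);
} family_ops;

static int init_family_a(void) { return 0; } /* placeholder: family A engine setup */
static int init_family_b(void) { return 0; } /* placeholder: family B engine setup */

static const family_ops kFamilyA = { "family-a", init_family_a };
static const family_ops kFamilyB = { "family-b", init_family_b };

/* Hypothetical device ID ranges; real ones would come from vendor documentation. */
typedef struct {
	uint16_t first_id;
	uint16_t last_id;
	const family_ops *ops;
} family_range;

static const family_range kFamilies[] = {
	{ 0x1000, 0x10ff, &kFamilyA },
	{ 0x2000, 0x20ff, &kFamilyB },
};

/* Returns the hook table of the family that claims device_id, or NULL if none does. */
static const family_ops *lookup_family(uint16_t device_id)
{
	for (size_t i = 0; i < sizeof(kFamilies) / sizeof(kFamilies[0]); i++) {
		if (device_id >= kFamilies[i].first_id && device_id <= kFamilies[i].last_id)
			return kFamilies[i].ops;
	}
	return NULL;
}
```

Each family then lives in its own small source file (or add-on), so fixing one family’s hooks cannot change the code path of another.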

Ah, OK. I asked because you stated that you have the Intel hardware driver for Vulkan ported. I assumed it would contain most of the hardware (register-level) access code. Do you have the source online somewhere? I’d be interested to have a look at it.

BTW, in the old days I had a 3D driver for nVidia, hardcoded inside our Mesa lib, which simply used a Haiku (BeOS) accelerant clone. I did some simple synchronization via the shared_info memory. So I was wondering: if most of the hardware-related code is already inside the Vulkan driver, wouldn’t it be possible to add the rest to the accelerant, which you could then simply clone for the needed functions?

Consider me a newbie on this stuff; I sometimes just try crazy things to get stuff going (at least I did in the past).

Oh, on a side note: I just got my hands on an Intel i5-6600K CPU and a mainboard with Intel graphics. I’ll be building a system with it so I can finally do some work on the Intel driver for more recent systems (2015 in this case).

The Intel Vulkan driver sources are located at src/intel/vulkan in mesa/mesa, the Mesa 3D Graphics Library (mirrored from https://gitlab.freedesktop.org/mesa/mesa). Or do you mean all the patches needed to get the driver compiled and loaded? Multiple parts are needed to use the driver:

- libvulkan.so: loads the driver add-ons and provides the public Vulkan API to applications. Source code: KhronosGroup/Vulkan-Loader on GitHub.

- libdrm: contains wrappers over the DRM ioctls. All the functions used by the Radeon Vulkan driver are listed here. The Intel Vulkan driver barely uses libdrm; it calls the ioctls directly. Source code: Mesa / drm · GitLab.

- Mesa Vulkan userland drivers. Source code: Mesa / mesa · GitLab.

- WSI add-on for rendering to a surface (window etc.). Source code: Mesa / vulkan-wsi-layer · GitLab.

Would it be too complex to speed up the Mesa (Vulkan) effort by having it access the GPU’s hardware registers directly (they can be looked up in the Linux DRM source code), instead of trying to port the Linux DRM?

I mean, by writing something designed exclusively for Haiku.

(an abstract part shared by all GPUs, and a part specific to each GPU hardware family)

It probably needs someone with skills in designing GPU drivers. (hehe)

So these could be added to our kernel graphics driver (if not fully inside the accelerant)? I like the idea of ‘almost not using DRM’, since to me, as a newbie, that sounds like less work to do (?)

In the case of the nVidia driver, it worked more or less like this:

- The 2D accelerant also initialized the 3D engine registers needed for rendering.

- I had already created DMA access earlier: the circular command buffer for 2D/3D rendering resides in main system memory, and the GPU was instructed to auto-fetch and execute the commands (also important back then because of the AGP system and its bottlenecks).

- To make this work there were 8 (?) DMA ‘channels’ which you could set up for specific commands. Both the 3D add-on (a clone accelerant with internal 3D functions, but otherwise a normal clone) and the 2D accelerant (which was also accelerated back then, using of course the same engine as the 3D add-on) dynamically reassigned these channels depending on the engine command they needed executed.

- The same single DMA command buffer was used by both the 2D and 3D accelerants, intermixed.
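The setup described above (one circular command buffer in system memory, written by both the 2D accelerant and the 3D add-on and fetched by the GPU) can be sketched as a ring with a put pointer owned by the CPU and a get pointer advanced by the GPU. This is a simplified software model with hypothetical names: on real hardware the get pointer is a register the GPU updates, writing the put pointer to a register is what kicks off the fetch, and the 2D and 3D clients would need a lock (for example via shared_info) to serialize their writes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_WORDS 16u /* hypothetical ring size in 32-bit words */
static_assert((RING_WORDS & (RING_WORDS - 1u)) == 0, "RING_WORDS must be a power of two");

typedef struct {
	uint32_t words[RING_WORDS];
	uint32_t put; /* next slot the CPU writes (shared by 2D and 3D clients) */
	uint32_t get; /* next slot the GPU fetches (advanced by the engine) */
} cmd_ring;

/* Free slots; one slot is kept empty so that put == get always means "ring empty". */
static uint32_t ring_space(const cmd_ring *r)
{
	return (r->get - r->put - 1u) & (RING_WORDS - 1u);
}

/* CPU side: append a command packet, or fail if the GPU has not caught up yet. */
static bool ring_emit(cmd_ring *r, const uint32_t *cmd, uint32_t count)
{
	if (count > ring_space(r))
		return false;
	for (uint32_t i = 0; i < count; i++) {
		r->words[r->put] = cmd[i];
		r->put = (r->put + 1u) & (RING_WORDS - 1u);
	}
	return true;
}

/* Stand-in for the GPU: fetch everything up to put; returns the number of words fetched. */
static uint32_t gpu_fetch_all(cmd_ring *r)
{
	uint32_t fetched = 0;
	while (r->get != r->put) {
		r->get = (r->get + 1u) & (RING_WORDS - 1u);
		fetched++;
	}
	return fetched;
}
```

Because 2D and 3D packets go into the same ring, the GPU executes them in exactly the order they were written, which is what allows them to be intermixed safely.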

If you look at nouveau and its development, you see that they took the same approach and carried it much further (in a much more detailed and sophisticated way), now even for ‘simple’ things like modesetting. All control now goes via DMA and this command buffer.

For me this means that to make newer cards work with my older driver, I kind of need to ‘reverse engineer’ which registers are actually accessed via the DMA buffer, since my driver still programs them directly (speed is not important here).

You write ‘directly accessing registers’, but if all is right, for 3D at least, this is never done. You just have structs in memory that hold the data in the format the engine needs, and the GPU fetches them from this command (and data) buffer and dumps them onto the registers, even in batches.
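To make the ‘structs in memory’ idea concrete: on nVidia hardware, each packet in the command buffer starts with a header word naming the engine method (a register offset), the subchannel the engine is bound to, and how many data words follow; the GPU then writes those data words to consecutive methods. The bit layout below is modeled on the NV04-style header that nouveau’s pushbuffer code builds (count from bit 18, subchannel in bits 13-15, method in the low bits); treat it as an assumption to verify against the nouveau sources. The eight subchannels are presumably the ‘8 (?) DMA channels’ mentioned above, and the method offsets in the test are made-up values.

```c
#include <assert.h>
#include <stdint.h>

/* Header layout modeled on nouveau's NV04-style packets (assumption):
 * bits 18..28 = number of data words, bits 13..15 = subchannel,
 * bits 2..12  = method offset (a byte offset, multiple of 4). */
static uint32_t nv04_header(uint32_t subc, uint32_t method, uint32_t count)
{
	assert(subc < 8 && (method & 3u) == 0 && method < 0x2000u && count < 0x800u);
	return (count << 18) | (subc << 13) | method;
}

/* Appends one packet (header + data words) at buf[len]; returns the new length.
 * The caller must guarantee that buf has room for count + 1 more words. */
static uint32_t emit_packet(uint32_t *buf, uint32_t len, uint32_t subc,
	uint32_t method, const uint32_t *data, uint32_t count)
{
	buf[len++] = nv04_header(subc, method, count);
	for (uint32_t i = 0; i < count; i++)
		buf[len++] = data[i];
	return len;
}
```

The CPU never touches the engine registers here: it only formats words in memory, and the GPU applies a whole batch of register writes per packet.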

I’m a bit rusty on this stuff, and of course it’s info I gathered some 15 years ago, so I might be (way) off for today’s hardware, and for brands other than nVidia. Looking at the nouveau driver, though, I still more or less recognize the structures, which probably makes it doable to reverse at least some things from it to benefit the current nvidia driver.

OK, I don’t know if I really answered the question. The above just popped into my head.

Yes, it would be nice if that is possible. Some kernel driver → accelerant trampoline (port_id etc.) may be needed, because some of the logic needed to implement the ioctls is located in the accelerant. I am not sure how difficult that is to implement; you have more knowledge on this subject. @waddlesplash is skeptical about implementing DRM ioctls in the existing Haiku drivers.
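One way to picture the kernel driver → accelerant trampoline: the ioctl request number and its argument struct are packed into a single message that could be sent with Haiku’s write_port() and answered on a reply port read with read_port(). The wire format and helper names below are hypothetical; only the idea of passing (request, argument bytes) over a port comes from this discussion, and the sketch does not call the port API itself.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical wire format for forwarding one ioctl over a port. */
typedef struct {
	uint32_t request;  /* ioctl request number, e.g. a DRM_IOCTL_* value */
	uint32_t arg_size; /* number of argument bytes that follow the header */
} fwd_header;

/* Packs header + argument bytes into buf; returns the bytes used, or 0 if buf is too small. */
static size_t fwd_pack(uint8_t *buf, size_t cap, uint32_t request,
	const void *arg, uint32_t arg_size)
{
	assert(buf != NULL && (arg != NULL || arg_size == 0));
	fwd_header h = { request, arg_size };
	if (cap < sizeof h + arg_size)
		return 0;
	memcpy(buf, &h, sizeof h);
	if (arg_size > 0)
		memcpy(buf + sizeof h, arg, arg_size);
	return sizeof h + arg_size;
}

/* Unpacks a received message; returns a pointer to the argument bytes inside buf,
 * or NULL if the message is truncated. */
static const uint8_t *fwd_unpack(const uint8_t *buf, size_t len, fwd_header *out)
{
	if (len < sizeof *out)
		return NULL;
	memcpy(out, buf, sizeof *out);
	if (len - sizeof *out < out->arg_size)
		return NULL;
	return buf + sizeof *out;
}
```

The accelerant side would unpack the message, run the real ioctl logic on the argument bytes, and send the (possibly modified) bytes back the same way, so the caller sees ordinary ioctl semantics.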

Why not turn the ioctls into their own accelerant, outside the kernel?

That is also possible, but it needs more patching of libdrm and the Vulkan userland drivers (especially Intel).

It would reduce the need to muck with the Haiku kernel, and allow other Linux drivers to be ported more easily. Accelerants are cloneable, IIRC. Just put the needed functions in the ioctl accelerant, like futex.

Also, at least to get started, accelerants outside the kernel give a layer of protection against the kernel being taken down by code errors.

If you want a really crazy idea: use hardware virtualization and put the entire Linux kernel in an accelerant, running as a virtualized slave. It sounds difficult as hell, but it would give Haiku a low-maintenance driver structure to rely on.

Just some random things that come to mind here:

- While I do understand the risks of putting too much functionality in the kernel driver, I think we should allow it anyway for some functions (futex, as I read somewhere?), but keep it simple and keep as much as possible in the accelerant, even if that means creating some (not too much) special stuff based on the Linux kernel variant, as long as it doesn’t take too much work to move it to userspace later.

- I think it’s perfectly OK to extend/modify the accelerant we have: the 2D hooks can go, I guess, and all acceleration can be done via the 3D route in the end (but then it should of course work for more than a single output window).

- Using ioctls is slow AFAIK, which means you want to use them as little as possible for 3D acceleration.

- Using 3D acceleration ‘by definition’ (I’d say) means we lose some security. It’s not possible to double-check every action taken, since that would impose a large speed penalty on the acceleration we’d gain.

- All the more reason, of course, to keep error-prone stuff in userland.

- Speaking for myself: I don’t mind having it in kernelspace temporarily if that means we’d have acceleration in no time. Once that works, it can be fine-tuned later. At this point I’d prefer a hack solution over no solution at all, since it gives us knowledge, and we’ll quickly find out where the risks and bottlenecks are when we rearrange it.

- I hope I make a bit of sense… As you probably gathered, I’m no architect.
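The point about ioctls being slow usually leads to batching: commands are collected in a user-space buffer and handed to the kernel in one call per batch instead of one call per command. A minimal sketch, assuming a hypothetical submit callback that stands in for the single ioctl (or port message) made per batch; the batch size is arbitrary.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BATCH_CAP 32 /* hypothetical batch size, in command words */

typedef void (*submit_fn)(const uint32_t *words, size_t count, void *ctx);

typedef struct {
	uint32_t words[BATCH_CAP];
	size_t count;
	submit_fn submit; /* stands in for one ioctl / kernel round trip */
	void *ctx;
} batch;

/* Hands everything collected so far to the kernel in a single call. */
static void batch_flush(batch *b)
{
	if (b->count == 0)
		return;
	b->submit(b->words, b->count, b->ctx);
	b->count = 0;
}

/* Queues one command word; only crosses into the kernel when the batch is full. */
static void batch_push(batch *b, uint32_t word)
{
	assert(b->submit != NULL);
	if (b->count == BATCH_CAP)
		batch_flush(b);
	b->words[b->count++] = word;
}

/* Example callback: counts how many kernel crossings would have happened. */
static void count_submits(const uint32_t *words, size_t count, void *ctx)
{
	(void)words;
	(void)count;
	(*(int *)ctx)++;
}
```

With a batch size of 32, pushing 100 commands costs four kernel crossings instead of 100; a real driver would also flush whenever it must wait for results.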

From libdrm sources:

/* The whole drmOpen thing is a fiasco and we need to find a way back to just using open(2). For now, however, lets just make things worse with even more ad hoc directory walking code to discover the device file name. */

There are no such things as device major/minor numbers in Haiku. libdrm needs some rework to be actually usable on Haiku.
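Without major/minor numbers, a natural replacement for libdrm’s device discovery on Haiku is to walk the directory where the graphics drivers publish their device nodes (/dev/graphics on Haiku) and identify devices by path. A minimal POSIX sketch; the helper name and the rule of skipping entries that start with a dot are assumptions, and a real port would still have to query each node to find out which driver is behind it.

```c
#include <assert.h>
#include <dirent.h>
#include <stdio.h>

#define MAX_NODES 16
#define NODE_PATH_LEN 256

/* Collects the full paths of the entries in dir (e.g. "/dev/graphics" on Haiku).
 * Returns the number of paths stored (at most max), or -1 if dir cannot be opened. */
static int list_gpu_nodes(const char *dir, char paths[][NODE_PATH_LEN], int max)
{
	assert(dir != NULL && paths != NULL);
	DIR *d = opendir(dir);
	if (d == NULL)
		return -1;
	int n = 0;
	struct dirent *e;
	while (n < max && (e = readdir(d)) != NULL) {
		if (e->d_name[0] == '.')
			continue; /* skip ".", ".." and hidden entries */
		snprintf(paths[n], NODE_PATH_LEN, "%s/%s", dir, e->d_name);
		n++;
	}
	closedir(d);
	return n;
}
```

The device path itself then becomes the identity that libdrm currently derives from major/minor numbers.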

Hehe, the funny paradox would be if you went and wrote a “Haiku version of DRM”, and then the Linux people saw it and took it as a starting point for a more decent rewrite.

I started implementing my own libdrm.so without major/minor numbers and KMS stuff. I implemented a stub graphics card device and drmIoctl(), so the Radeon Vulkan driver detects the stub graphics card and attempts to initialize it. Currently it fails because of an unknown GPU family ID.

libdrm_amdgpu.so is used without modifications.

> /Haiku/data/packages/Vulkan/build.x86_64/bin/gears

drmGetDevices2()

drmIoctl(3, 0xc0406400)

DRM_IOCTL_VERSION

drmIoctl(3, 0xc0406400)

DRM_IOCTL_VERSION

drmIoctl(3, 0xc0406400)

DRM_IOCTL_VERSION

drmIoctl(3, 0xc0406400)

DRM_IOCTL_VERSION

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_ACCEL_WORKING

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_DEV_INFO

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_READ_MMR_REG

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_READ_MMR_REG

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_READ_MMR_REG

/Haiku/data/packages/libdrm/drm/builddir.x86_64/install/share/libdrm/amdgpu.ids version: 1.0.0

drmGetDevice2()

drmFreeDevice()

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_DEV_INFO

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_UVD

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_UVD

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_UVD

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_UVD

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_VCN

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_VCN

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_VCN

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_VCN

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_UVD

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_VCN

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_FW_VCN

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_VRAM_GTT

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_VRAM_USAGE

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_VRAM_GTT

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_VIS_VRAM_USAGE

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_VRAM_GTT

drmIoctl(4, 0x80206445)

DRM_AMDGPU_INFO

AMDGPU_INFO_GTT_USAGE

amdgpu: unknown (family_id, chip_external_rev): (0, 0)

drmFreeDevices()

drmFreeDevice()

Fatal : VkResult is "ERROR_INITIALIZATION_FAILED" in ../base/vulkanexamplebase.cpp at line 926

gears: ../base/vulkanexamplebase.cpp:926:bool VulkanExampleBase::initVulkan(): res == VK_SUCCESS

Kill Thread
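The request numbers in this log decode cleanly if they use the BSD-style <sys/ioccom.h> layout that libdrm’s drm.h falls back to on non-Linux systems (an assumption about this particular build): the top three bits give the direction, the next 13 bits the argument size, then an 8-bit group (‘d’ for DRM) and an 8-bit command number. Read that way, 0xc0406400 is a read/write request for command 0x00 (DRM_IOCTL_VERSION) with a 64-byte argument, and 0x80206445 is a write request for command 0x45, i.e. DRM_COMMAND_BASE (0x40) plus DRM_AMDGPU_INFO (0x05), with a 32-byte argument. A stub drmIoctl() can route on that command number; the decoder and routing enum below are hypothetical helpers, not libdrm API.

```c
#include <assert.h>
#include <stdint.h>

/* BSD <sys/ioccom.h>-style direction flags (assumed layout). */
#define IOC_VOID_BIT 0x20000000u
#define IOC_OUT_BIT  0x40000000u /* kernel copies the argument back out */
#define IOC_IN_BIT   0x80000000u /* kernel copies the argument in */

typedef struct {
	uint32_t dir;   /* combination of the IOC_*_BIT flags */
	uint32_t size;  /* argument size in bytes (13 bits) */
	uint8_t group;  /* 'd' (0x64) for DRM */
	uint8_t number; /* 0x00..0x3f core DRM, 0x40 and up driver-specific */
} ioctl_fields;

static ioctl_fields decode_ioctl(uint32_t request)
{
	ioctl_fields f;
	f.dir = request & 0xe0000000u;
	f.size = (request >> 16) & 0x1fffu;
	f.group = (uint8_t)((request >> 8) & 0xffu);
	f.number = (uint8_t)(request & 0xffu);
	return f;
}

/* Hypothetical routing categories for a stub drmIoctl(). */
typedef enum {
	STUB_REQ_NOT_DRM,
	STUB_REQ_VERSION,     /* command 0x00: DRM_IOCTL_VERSION */
	STUB_REQ_AMDGPU_INFO, /* command 0x45: DRM_COMMAND_BASE + DRM_AMDGPU_INFO */
	STUB_REQ_UNHANDLED
} stub_req_kind;

static stub_req_kind route_drm_request(uint32_t request)
{
	ioctl_fields f = decode_ioctl(request);
	if (f.group != 'd')
		return STUB_REQ_NOT_DRM;
	if (f.number == 0x00)
		return STUB_REQ_VERSION;
	if (f.number == 0x40 + 0x05)
		return STUB_REQ_AMDGPU_INFO;
	return STUB_REQ_UNHANDLED;
}
```

The “unknown (family_id, chip_external_rev): (0, 0)” line fits this picture: presumably the stub leaves the reply for AMDGPU_INFO_DEV_INFO zeroed, so the amdgpu code cannot identify the chip until the stub writes real device info to the buffer that the request’s return_pointer points to.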

It’s certainly more complicated than that, but if it proves good, real Linux people will not trip over their own pride.

The card uses AtomBIOS (or at least it used to); the modesetting driver calls into AtomBIOS, so the modesetting driver should already have this information.

In case you don’t know, AtomBIOS is the GPU BIOS subsystem.

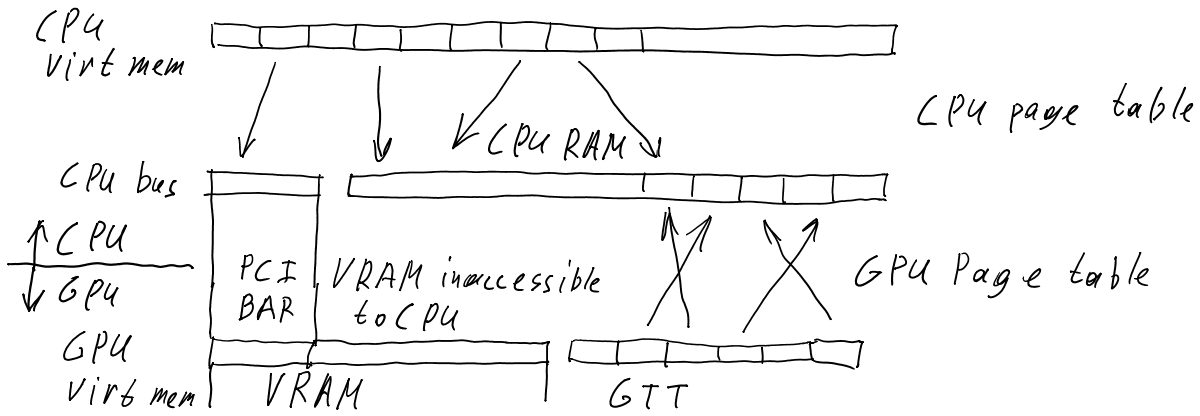

Radeon video memory structure, if I am correct. The GPU has its own virtual memory and page table, like the CPU.

That sounds about right. Especially in Vulkan, you don’t have the driver do memory management between the two. You can pretty much allocate the entire memory range and put your own memory manager on top of it.
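“Allocate the entire range and put your own memory manager on top” usually means suballocation: one large device-memory allocation (in Vulkan, a single vkAllocateMemory call) carved into aligned offsets for individual buffers and images. A minimal linear (bump) suballocator sketch with hypothetical names; it only hands out offsets, never frees individual blocks, and relies on alignments being powers of two, as Vulkan’s VkMemoryRequirements alignments are.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
	uint64_t size; /* total bytes in the one big allocation */
	uint64_t used; /* offset of the first free byte */
} linear_heap;

/* Reserves size bytes aligned to align (a power of two).
 * Stores the offset into the big allocation in *offset; returns false if it does not fit. */
static bool heap_alloc(linear_heap *h, uint64_t size, uint64_t align, uint64_t *offset)
{
	assert(align != 0 && (align & (align - 1)) == 0);
	uint64_t start = (h->used + align - 1) & ~(align - 1);
	if (start > h->size || size > h->size - start)
		return false;
	*offset = start;
	h->used = start + size;
	return true;
}

/* Releases every suballocation at once, e.g. at the end of a frame. */
static void heap_reset(linear_heap *h)
{
	h->used = 0;
}
```

A bump allocator is the simplest possible choice; general-purpose managers add free lists or buddy allocation on top of the same offset arithmetic.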

I do believe that is correct. AtomBIOS is called during boot and brings up GPU 3D acceleration. kallisti5 should be able to help here.