That ranking also looks quite concerning to me.

Uptime Kuma is simply status page software that regularly checks whether the server is up. Why is it causing most of the traffic?

You should really find out if it’s an official Haiku thing, and if not, block it.

Search engine traffic is expected and nothing to worry about.

SEO bullshit seems like a waste of traffic; I’d block it.

Social media garbage may come from link previews when someone links to Haiku, which makes sense.

And then there is the AI garbage. Even if it’s only a minor annoyance for now, nobody consented to having their posts stolen, and it wastes resources on donation-powered servers, so I’d block that as well.

On the one hand I agree, I don’t really want my stuff to be used to train an AI. On the other hand we can’t effectively prevent this with forum posts that are, well, public. Even if we block everything we think is an AI trainer, impersonating Googlebot or another search engine would not be that hard, and archives of this page are bound to land in random places. I have, however, already put together a list of crawlers I want banned; I can’t add it myself, so I will wait for a forum administrator to do it for me.

I don’t care at all who uses my code or how it is used. If my code is useful to someone, that’s great! And it doesn’t matter whether it’s a human or an AI. You can even take my code and pass it off as your own, it will remain on your conscience, nothing more. I don’t care. Why? Because I write programs for fun and if someone else can use it besides me, it’s good.

According to a Net search: "AI-powered coding assistants can provide intelligent code completion, flag bugs and errors, offer suggestions for improvement and translate from one programming language to another in a matter of seconds. Some can even generate code automatically, and offer code explanations in plain language."

Haiku needs ported apps, and uses Haikuporter to do this. But you could get an AI assistant to convert code from one language to another that’s suitable to run on Haiku. You could also train the AI assistant on the Haiku API. So, eventually, the work of Haikuporter could be undertaken by an AI assistant in much quicker time (no concerns about excessive energy consumption) - with a human just needed to fix up some things to make it run fine on the OS. And soon you would have an R1…

Codeium is considered the best free (for personal use) AI assistant. It can be run in an IDE or a Chrome-based browser.

If we set aside the question of “intelligence”, AI is just another tool in human hands, which can do something worthwhile if used wisely. I myself would like to see AI working on drivers for Haiku, 3D acceleration and similar low-level stuff.

Personally I’m not a fan of so-called artificial intelligence, especially of the large language model variety. They’re extremely inefficient and rely extensively on ingesting a lot of human-made content with questionable consent (from creators) or even potentially illegal license violations. LLMs in particular are basically just word regurgitators, lacking actual understanding present in true intelligence.

As shown in this thread, supposed artificial intelligence is unable to create code outside of what little of the Haiku API is present in AI models. It is unable to infer other possible ways to use the Haiku API based solely on its limited knowledge. Frankly, out of all the things currently accelerating the end of our world, this alleged artificial intelligence hullabaloo seems rather pointless.

My comment was about stuff people post and say in the forums, not about open source code I write ; )

But you do post publicly on forums, don’t you? Then what’s the problem?

Publicly available doesn’t automatically mean things don’t have licenses and you can do whatever you want with it.

Many forums specify Creative Commons licenses that require crediting the original author when using something; some even forbid derivatives.

Unfortunately our forum doesn’t specify a license yet, but considering the increasing danger of abuse by AI companies, it would probably be a good idea to add one.

In robots.txt you have to set a specific rule for each bot.

Most of the search engine ones are reasonable by default and will have a crawl delay of a few seconds (so they would request one page every few seconds).

The AI ones decided to have no delay at all by default. Some of them even ignore robots.txt completely. I don’t know if that is “evil”, but it is certainly not well-behaved. On my personal server, I have banned those from scanning anything, just as I do when a human decides to mirror my entire website with a website mirroring tool (which ends up going through an HTML rendering of every version of every file in my Git repository browser, when they could get all of that through a simple git clone in a few seconds).

If I don’t ban them, my internet connection degrades significantly and my electricity bill goes up as the poor server tries to handle all these requests. And even if I didn’t care about reuse of my code, I do care about this.

So I have to put my copyright and license on every word I say anywhere? I’m glad I don’t care about that at all.

robots.txt supports wildcards, so you can set a crawl delay for all bots or for specific ones.

If we add a more specific rule, the crawl delay line needs to be repeated in it, but for the AI ones the rule would probably just be telling them to buzz off.
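To make the point about wildcards and repeated lines concrete, here is a minimal sketch that feeds a made-up robots.txt to Python's standard `urllib.robotparser` and asks what each bot may do. The file contents, the `ExampleSearchBot` name and the example.org URLs are assumptions for illustration only, not the forum's actual configuration. Note also that `Crawl-delay` is a non-standard extension and that this only shows how Python's parser reads the file; real crawlers may interpret it differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, not the forum's real one.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5

User-agent: GPTBot
Disallow: /

User-agent: ExampleSearchBot
Crawl-delay: 5
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def summarize(agent, url="https://example.org/t/some-topic"):
    """Return (may_fetch, crawl_delay_in_seconds_or_None) for a user agent."""
    return parser.can_fetch(agent, url), parser.crawl_delay(agent)


for agent in ("SomeOtherBot", "GPTBot", "ExampleSearchBot"):
    print(agent, summarize(agent))
```

A bot that matches a specific group uses only that group: in this parser the `GPTBot` group has no delay of its own and does not inherit the wildcard one, which is why `ExampleSearchBot` repeats the `Crawl-delay` line.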

Many AI firms have crawlers that just ignore robots.txt, unfortunately.
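For crawlers that ignore robots.txt, the remaining option is to refuse them on the server side. The following is a rough sketch of that idea as a WSGI middleware that answers 403 based on the User-Agent header. The marker list and the function names are hypothetical, this is not how the Haiku forum is actually configured, and as noted earlier in the thread a crawler can trivially impersonate another user agent to get around it.

```python
# Hypothetical blocklist: substrings matched case-insensitively
# against the User-Agent header. Adjust to taste.
BLOCKED_AGENT_MARKERS = ("gptbot", "ccbot", "examplescraper")


def is_blocked(user_agent):
    """Return True if the User-Agent contains one of the blocked markers."""
    ua = (user_agent or "").lower()
    return any(marker in ua for marker in BLOCKED_AGENT_MARKERS)


def block_crawlers(app):
    """Wrap a WSGI app so that blocked user agents get a 403 response."""
    def middleware(environ, start_response):
        if is_blocked(environ.get("HTTP_USER_AGENT")):
            body = b"Forbidden\n"
            start_response("403 Forbidden", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        # Everyone else is passed through to the wrapped application.
        return app(environ, start_response)
    return middleware
```

In practice the same check is usually done in the web server or reverse proxy configuration rather than in application code, so that blocked requests never reach the application at all.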

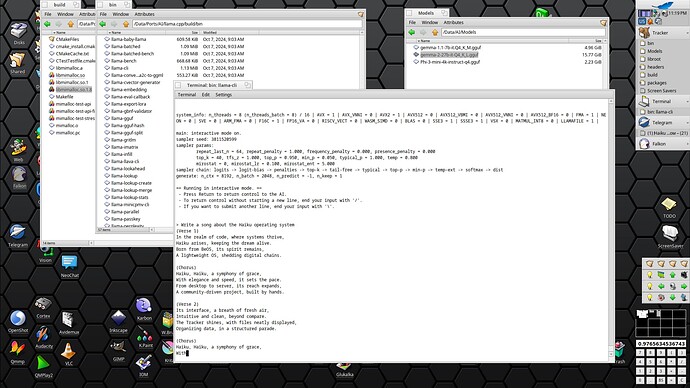

Nothing, huh? I have launched models such as Phi-3, Gemma-1.1-7b and even Gemma-2-27b without any problems (though I had to use the mimalloc allocator instead of the system one). Everything is quite usable and works fine on a Ryzen 7 with 32 GB RAM.

Good day,

That AI thingy is nice. I would appreciate it if AI gave us some nice Haiku API bindings for Python, Nim, … That would also be a nice use of AI …

Regards,

RR

Haiku API bindings for Python already exist: GitHub - coolcoder613eb/Haiku-PyAPI: Python bindings for the Haiku API

It’s not complete yet, but it has enough features to build simple apps based on it, and it’s still actively developed, so more features will likely come in the future.

Well, you do have far better hardware than me. I did say unusable, not that they didn’t work. I will try it with the ones you mentioned though! Also, how do you use mimalloc?

Via LD_PRELOAD.

For example: LD_PRELOAD=/Data/Ports/Alloc/mimalloc/build/libmimalloc.so.1.8 llama-cli -m /Data/AI/Models/gemma-2-27b-it-Q4_K_L.gguf -cnv --chat-template gemma
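If you would rather launch it from a script than type the variable each time, the same idea can be sketched in Python: copy the environment, set LD_PRELOAD to the allocator library, and start the program with that environment. The helper names `with_preload` and `run_with_allocator` are made up for this example, and the sketch simply overrides any existing LD_PRELOAD value instead of merging with it.

```python
import os
import subprocess


def with_preload(lib_path, env=None):
    """Return a copy of the environment with LD_PRELOAD set to lib_path.

    Any existing LD_PRELOAD value is replaced, not extended.
    """
    new_env = dict(os.environ if env is None else env)
    new_env["LD_PRELOAD"] = lib_path
    return new_env


def run_with_allocator(cmd, lib_path):
    """Run cmd (a list of arguments) with the given library preloaded."""
    return subprocess.run(cmd, env=with_preload(lib_path), check=False)
```

With the paths from the command above, the call would be `run_with_allocator(["llama-cli", "-m", "/Data/AI/Models/gemma-2-27b-it-Q4_K_L.gguf", "-cnv", "--chat-template", "gemma"], "/Data/Ports/Alloc/mimalloc/build/libmimalloc.so.1.8")`.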

Small models like 7b do not need very fast hardware. GPU is something you need for larger models.

It does, actually. It’s buried deep in the Terms of Service:

3. User Content License

User contributions are licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. Without limiting any of those representations or warranties, Haiku has the right (though not the obligation) to, in Haiku’s sole discretion (i) refuse or remove any content that, in Haiku’s reasonable opinion, violates any Haiku policy or is in any way harmful or objectionable, or (ii) terminate or deny access to and use of the Website to any individual or entity for any reason, in Haiku’s sole discretion. Haiku will have no obligation to provide a refund of any amounts previously paid.