Good day,

I was trying a different approach, but haven't succeeded yet. It's all Python, and, like others, I ran into problems installing PyPI packages through pip: many of the related packages simply can't be installed. Some, like numpy, are available in HaikuDepot, but others are not. When I try to install some of the Python modules involved, this is usually what I get:

```
ERROR: Could not find a version that satisfies the requirement <module-name> (from versions: none)
ERROR: No matching distribution found for <module-name>
```

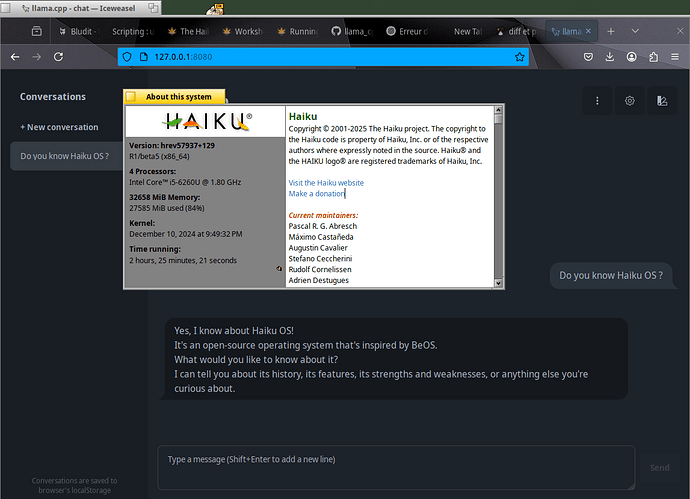
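A likely cause of `(from versions: none)` is that the package publishes only binary wheels on PyPI (tagged for manylinux, macOS or Windows) and no source distribution, so pip finds no file it is allowed to install on Haiku. The sketch below is only a rough illustration of that matching, not pip's actual logic (it ignores the Python and ABI tags and manylinux compatibility rules). The helper names are hypothetical, and the Haiku platform string `haiku-1-x86_64` used in the example is an assumption; `python -c "import sysconfig; print(sysconfig.get_platform())"` shows the real one, and `python -m pip debug --verbose` lists the tags pip actually accepts.

```python
import sysconfig


def local_platform_tag(platform_string=None):
    """Generic wheel platform tag for this interpreter, e.g. 'linux_x86_64'."""
    plat = platform_string or sysconfig.get_platform()
    return plat.replace(".", "_").replace("-", "_").replace(" ", "_")


def wheel_platform_tags(filename):
    """Platform tags from a wheel filename (its last dash-separated field).

    Compressed tag sets such as 'manylinux_2_17_x86_64.manylinux2014_x86_64'
    are split on '.'.
    """
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename}")
    return set(filename[: -len(".whl")].split("-")[-1].split("."))


def wheel_fits_platform(filename, platform_string=None):
    """Rough check: is the wheel pure Python ('any') or built for this exact platform?"""
    tags = wheel_platform_tags(filename)
    return "any" in tags or local_platform_tag(platform_string) in tags
```

With the assumed Haiku string, a pure-Python wheel such as `requests-2.31.0-py3-none-any.whl` passes the check, while a `manylinux` numpy wheel does not, which is the situation where pip ends up with no candidate versions at all.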

I installed "GPT4All" through pip without issues. GPT4All is meant for running LLMs locally, without an internet connection, so no information is shared with anyone. Privacy first. They offer a standalone application for Windows, macOS and Ubuntu Linux, which I can also run without issues on Arch and on Fedora. You can download a selection of LLMs directly from the app, or download another LLM from Hugging Face and check whether it works.

There is also LM Studio, another standalone app for running LLMs locally without an internet connection, but I haven't tried it yet, not even on Linux.

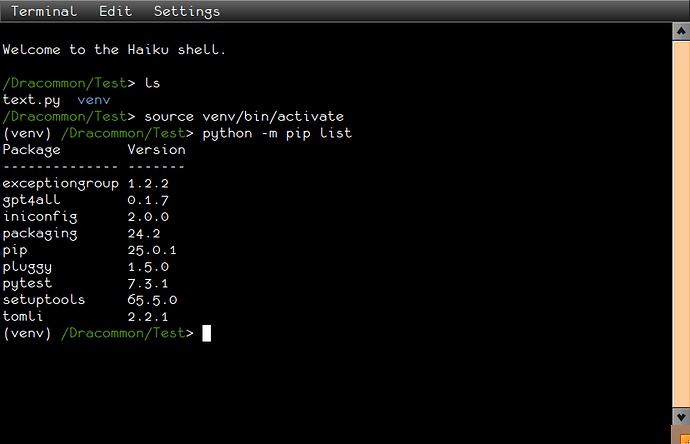

Back to the pip route: running `python -m pip install gpt4all` inside a virtual environment, the installation worked.

It doesn't work yet, though: it fails when downloading the LLM, and this seems to be a library issue that I haven't solved yet. The first problem was an "Operating System not supported" error, which I solved by adding a Haiku branch to `get_c_shared_lib_extension()` in the `pyllmodel.py` file inside the virtual environment:

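```python
def get_c_shared_lib_extension():
    # `system` is defined elsewhere in pyllmodel.py; the names compared below
    # are the values platform.system() returns on each OS.
    if system == "Darwin":
        return "dylib"
    elif system == "Linux":
        return "so"
    elif system == "Windows":
        return "dll"
    elif system == "Haiku":  # added: Haiku also uses .so shared libraries
        return "so"
    else:
        raise Exception("Operating System not supported")
```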
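If you'd rather not keep chaining `elif` branches, the same check can be written as a lookup table. This is a hypothetical rewrite for illustration, not gpt4all's code; it takes the OS name as a parameter so it can be tested on any machine, and it raises `OSError` (a subclass of `Exception`) instead of a bare `Exception`.

```python
import platform

# Hypothetical table-driven variant of get_c_shared_lib_extension();
# not part of gpt4all. Keys are platform.system() values.
SHARED_LIB_EXTENSIONS = {
    "Darwin": "dylib",
    "Linux": "so",
    "Windows": "dll",
    "Haiku": "so",  # the branch added for Haiku
}


def shared_lib_extension(system=None):
    """Return the shared-library extension for `system` (defaults to the current OS)."""
    system = system if system is not None else platform.system()
    try:
        return SHARED_LIB_EXTENSIONS[system]
    except KeyError:
        raise OSError(f"Operating System not supported: {system}") from None
```

Supporting another OS then means adding one dictionary entry rather than another branch.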

And this is the simple attempt I’m running:

```python
from gpt4all import GPT4All

model = GPT4All("Meta-Llama-3-8B-Instruct.Q4_0.gguf")  # downloads / loads a 4.66GB LLM
with model.chat_session():
    print(model.generate("How can I run LLMs efficiently on my laptop?", max_tokens=1024))
```

The failure happens when the script tries to download the model. I haven't had much time to dig into it yet, so I'll let you know if I get any further.
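One thing that might be worth trying while the download is broken, offered only as an untested sketch: fetch the `.gguf` file by other means (for example with a browser), then tell the bindings not to download anything. I believe the `GPT4All` constructor accepts `model_path` and `allow_download` keyword arguments and that the default model directory is `~/.cache/gpt4all`; both are assumptions to check against the installed version. The helper names `find_local_model` and `load_offline` are hypothetical.

```python
from pathlib import Path

# Assumption: default download directory used by the gpt4all Python bindings.
DEFAULT_MODEL_DIR = Path.home() / ".cache" / "gpt4all"


def find_local_model(model_name, search_dirs=None):
    """Return the first directory containing a non-empty `model_name` file, else None."""
    for d in (Path(p) for p in (search_dirs or [DEFAULT_MODEL_DIR])):
        candidate = d / model_name
        if candidate.is_file() and candidate.stat().st_size > 0:
            return d
    return None


def load_offline(model_name, search_dirs=None):
    """Load a manually downloaded model without letting gpt4all download anything."""
    from gpt4all import GPT4All  # imported lazily so find_local_model works without gpt4all

    model_dir = find_local_model(model_name, search_dirs)
    if model_dir is None:
        raise FileNotFoundError(f"{model_name} not found in {search_dirs or [DEFAULT_MODEL_DIR]}")
    # Assumed keyword arguments: model_path (directory) and allow_download.
    return GPT4All(model_name, model_path=str(model_dir), allow_download=False)
```

Usage would be `model = load_offline("Meta-Llama-3-8B-Instruct.Q4_0.gguf")` followed by the same `chat_session()` block as above. If loading still fails, the problem is in the native libraries rather than in the download itself.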

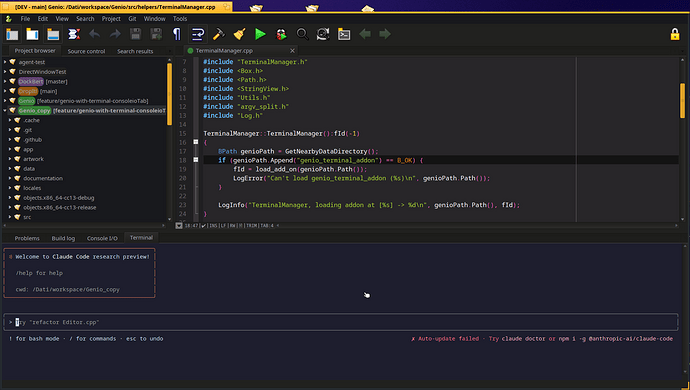

I like @Nexus-6's idea of having an assistant in Genio, though I must admit I haven't yet managed to get Genio working, so I couldn't try it with Python and Nim.

The more options we have, the better.

Regards,

RR