Dude, I am a college student with other obligations besides Haiku. I probably already spend too much time working on it (as the rather one-sided nature of the last progress report showed…). Just asking "when is it coming?" or "can you work on this?" is not helpful. I want the feature as much as (or more than) you do; I'm motivated without others asking me to be.

Still, getting nicknamed "batman" is kinda cool.

Yes, see above remark. The changes I’ve been doing for Haiku over the past few weeks were mostly “I have an hour to relax, let’s do some small stuff,” and not time to work on something large like USB WiFi or 3D acceleration.

I will be unbelievably busy between now and the end of the semester (mid-December), but then I have all of winter break (~1 month) with nothing on my plate…

Oh, there’ll be something before then, I think.

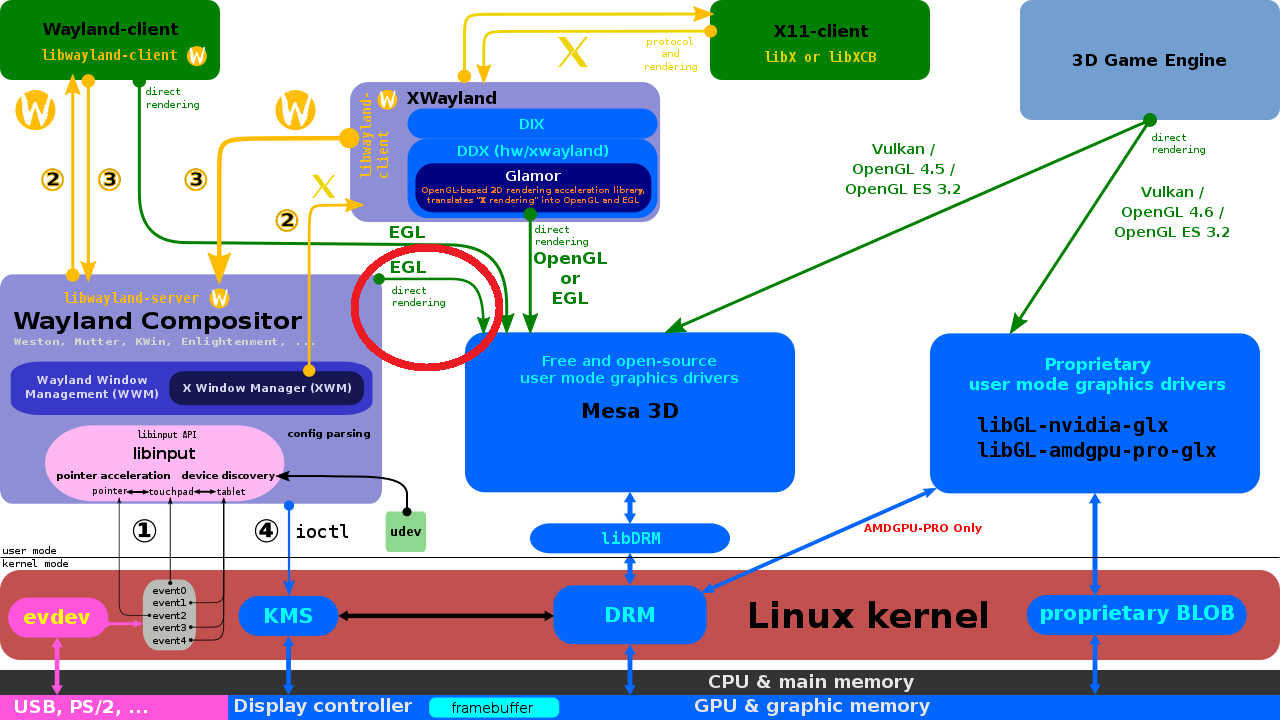

I did get some time in to work on DRM driver porting after the beta was released. I investigated what porting FreeBSD's Linux compatibility layer (on top of our existing FreeBSD compatibility layer) would be like, and actually got a significant chunk of it to compile. But that took a ton of hacks and stub headers added to our compatibility layer, and there were a lot of problems I realized I just wasn't going to be able to solve effectively without substantially modifying both compatibility layers. There were also some issues relating to memory management that I'm not sure were solvable at all. FreeBSD's, Linux's, and Haiku's memory management systems are not so fundamentally different, but they are different enough that running a Linux compatibility layer on top of a FreeBSD compatibility layer on top of a Haiku compatibility layer … well, you get the idea.

My next step will be trying to use DragonFlyBSD's or OpenBSD's DRM drivers. Their APIs have diverged significantly from FreeBSD's, though, so modifying our compatibility layer to work with them may turn out to be more work than writing a Linux compatibility layer from scratch … in which case I may wind up just doing that. If so, it will require much more development time (months) than these routes would. So at this point, I really just don't know what will happen.

But the otherwise-abandoned modifications to the FreeBSD layer did bring some benefits: last week's fixes to performance and battery life issues, as well as the 10Gb Ethernet drivers added the week before. So it wasn't for nothing.

I think it is the only important thing that Haiku lacks right now.