Not adding much to the conversation, but I am just now getting around to playing with it. I pasted some of our code in just to see what it came back with. Pretty cool!

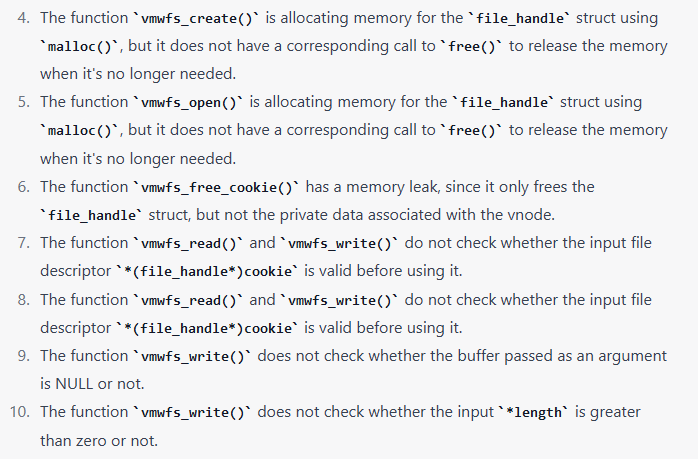

Which part of the code is this? It seems like it caught 2 memory leaks? Is this thing for real?

It was from one of the vmware_fs files from the VMware add-ons for Haiku. No idea if its findings are legit or not.

Typically, it is only correct in the context of the code you can share. When I need to paste several times, I start a new conversation and tell it up front that I am pasting the code in order, 300 lines at a time, and ask it to wait until I finish before replying. It will sometimes oblige, though you have to remind it to wait with each paste. Yeah, it is an amazing debugging tool, but it does get things wrong, only because of the limited context. I put my name on the waiting list for the professional version. I am excited, I am going to get so much shit done!!

No.

The “vmwfs_create” function is designed to allocate memory, so it calls malloc and not free. The “vmwfs_free” function is designed to free that memory, so it calls free and not malloc. This is not at all a memory leak.
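To illustrate the point, here is a minimal sketch of the usual create/free pairing in C. The names `example_node`, `example_create` and `example_free` are hypothetical stand-ins; the real `vmwfs_create`/`vmwfs_free` signatures are not shown in this thread. Neither function leaks on its own: a leak only exists if some caller gets a pointer from the create function and never passes it to the free function.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical node type standing in for whatever vmwfs_create() actually
   allocates; the real vmware_fs structures are not shown in this thread. */
typedef struct example_node {
    char *name;
    size_t size;
} example_node;

/* Allocates a node and hands ownership to the caller. There is deliberately
   no free() on the success path: releasing the memory is example_free()'s job. */
example_node *example_create(const char *name, size_t size)
{
    assert(name != NULL); /* precondition: caller must pass a valid name */

    example_node *node = malloc(sizeof *node);
    if (node == NULL)
        return NULL;

    size_t len = strlen(name) + 1; /* include the terminating NUL */
    node->name = malloc(len);
    if (node->name == NULL) {
        free(node); /* undo the partial allocation on failure */
        return NULL;
    }
    memcpy(node->name, name, len);
    node->size = size;
    return node;
}

/* Releases everything example_create() allocated. It calls free() and never
   malloc(); that asymmetry is the whole point of the pair. */
void example_free(example_node *node)
{
    if (node == NULL)
        return;
    free(node->name);
    free(node);
}
```

A caller would do `example_node *n = example_create("file.txt", 42);`, use `n`, then call `example_free(n);`. A tool like Valgrind would only report a leak if that last call were missing on some path, which is something you can't judge by looking at either function in isolation.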

As usual, ChatGPT will reply with something that looks like it would make sense at first glance, but it is just copy-pasted from random answers to similar questions on the internet. So, use the real thing: get one of the static analyzers that actually scan the code and understand some of it… or just ask some human to review your code, they will do a better job of it.

Yeah, I kinda had my doubts about it. Use the real thing indeed.

Actually, ChatGPT isn't wrong, it's just not aware of the entire context.

If I give you a small portion of a large problem, your knowledge is limited to that small portion, and if you're unaware of the larger set of variables, you're going to draw incorrect conclusions. You can observe this phenomenon everywhere in human society; it's not limited to ChatGPT.

The trick to working with ChatGPT is getting enough of the problem into the conversation and then engaging with it. So if ChatGPT says something isn't being freed, check, and if it is, share the code snippet that frees it and correct it. It does learn within the context of a conversation.

I've been working with it to design a more robust Kalman-filtering encoder scheme that predicts velocity and position for CNC machine position detection. That conversation is probably several hundred replies long at this point.

Point is: GIGO (garbage in, garbage out). You have to feed it to get it to work.

Does it remember ("learn") between conversations, or just within one?

ChatGPT had more context than me: it had the source code for these functions, and I can tell what it got wrong just by looking at the function names. Yet it guessed wrong.

At what point does it become faster to just do the research and write the code yourself? I don’t think what I typically need in my workflow is some tool to give me very fast, but often incorrect or inaccurate answers, that I then need to “correct” by talking with a robot. But maybe I’m old and grumpy and not very receptive to new technology.

It does remember conversational context, within a conversation.

I suspect that you're grumpy and old, and my hands don't work as well as they once did, so ChatGPT sparing my hands endless boilerplate and algorithm typing is a huge benefit. I still wind up working on the control logic, but I can give it some code and instructions to create specific equations, and it typically does this exceedingly well.

I have early symptoms of carpal tunnel, and typing really bothers my hands.

It's a tool like a screwdriver, and it takes some learning to extract the maximum benefit. I use it as an assistant: part Dictaphone, part logic analyzer.

If you tell it you're working with the Be API, and keep reminding it you're working with Haiku, eventually it commits this to memory and accuracy improves greatly.

Sounds like working with someone who has Alzheimer's disease .-.

Honestly, I'd rather not use a tool I have to train like a dog to behave. I don't think I am a particularly good dog trainer. With Valgrind, I at least know that it mostly knows what it is talking about.

If I understood correctly, these tools are used for code generation, and that can indeed cause problems. The one discussed in this thread is used for debugging, so it shouldn't be affected.

You can ask it to write code as well, even if that’s not its main purpose. And it’s even less clear what it was trained on for that, the training set is basically “a copy of the whole internet”. So, who knows what bugs and licensing violations it will get from there?

I can't wait for the copyright violation cases to fly, because you can't copyright math, and the fact that code has been allowed to be patented, as well as copyrighted, is ridiculous.

If two people solve the same problem, the odds of their code being similar are very high. There are really only so many ways to perform math.

At the high-level-language level, yeah, you could probably make some kind of artistic argument, but at the compiled-binary level, meh.

You can actually get ChatGPT to generate original code compositions, same as humans do.

You're all forgetting the smell of money, which can sway the legal system in some cases. Big tech companies and institutions are getting into AI bots now:

- OpenAI - ChatGPT

- Google - Bard

- Microsoft - Bing + AI

- Alibaba - AI soon

- Linux - Gnome + ChatGPT

- Uni Education - safe-to-fail AI project

The ridiculous nature of patenting math is the core issue.

Maybe ChatGPT is the source of Skynet and the Terminator.

You could be right. It might not be funny if it happens that way. I'm no friend of the inventors of AI to begin with.

Personally, I think Elon Musk’s desire to make a cybernetic implant with a modem is even more dangerous.

All in all, tech is becoming more dishonest by the minute.